- ↔

- →

to read (pdf)

- Letting AI Actively Manage Its Own Context | 明天的乌云

- Garden Offices for Sale UK - Portable Space

- Cord: Coordinating Trees of AI Agents | June Kim

- Style tips for less experienced developers coding with AI · honnibal.dev

- Haskell for all: Beyond agentic coding

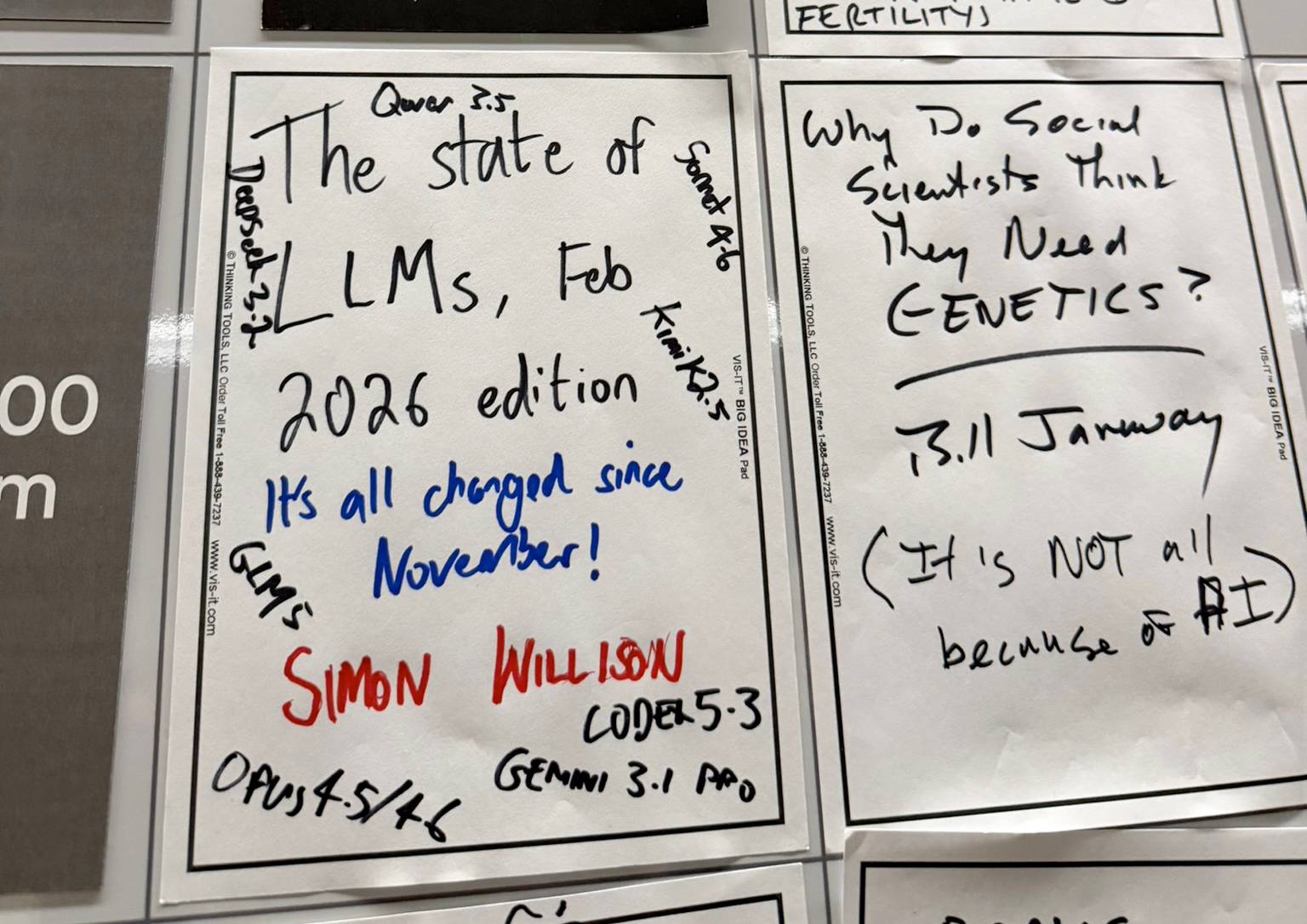

- February 27, 2026

-

🔗 @HexRaysSA@infosec.exchange ❤️ LAST CHANCE for savings you'll love... mastodon

❤️ LAST CHANCE for savings you'll love...

IDA Pro’s 40% OFF* this Valentine's season.Use code LOVE40 by February 28 to get your license to the industry's best, for 40% less: http://hex-rays.com/license-love

*Terms apply.

-

🔗 sacha chua :: living an awesome life Using speech recognition for on-the-fly translations in Emacs and faking in-buffer completion for the results rss

When I'm writing a journal entry in French, I sometimes want to translate a phrase that I can't look up word by word using a dictionary. Instead of switching to a browser, I can use an Emacs function to prompt me for text and either insert or display the translation. The plz library makes HTTP requests slightly neater.

(defun my-french-en-to-fr (text &optional display-only) (interactive (list (read-string "Text: ") current-prefix-arg)) (let* ((url "https://translation.googleapis.com/language/translate/v2") (params `(("key" . ,(getenv "GOOGLE_API_KEY")) ("q" . ,text) ("source" . "en") ("target" . "fr") ("format" . "text"))) (query-string (mapconcat (lambda (pair) (format "%s=%s" (url-hexify-string (car pair)) (url-hexify-string (cdr pair)))) params "&")) (full-url (concat url "?" query-string))) (let* ((response (plz 'get full-url :as #'json-read)) (data (alist-get 'data response)) (translations (alist-get 'translations data)) (first-translation (car translations)) (translated-text (alist-get 'translatedText first-translation))) (when (called-interactively-p 'any) (if display-only (message "%s" translated-text) (insert translated-text))) translated-text)))I think it would be even nicer if I could use speech synthesis, so I can keep it a little more separate from my typing thoughts. I want to be able to say "Okay, translate …" or "Okay, … in French" to get a translation. I've been using my fork of natrys/whisper.el for speech recognition in English, and I like it a lot. By adding a function to

whisper-after-transcription-hook, I can modify the intermediate results before they're inserted into the buffer.(defun my-whisper-translate () (goto-char (point-min)) (let ((case-fold-search t)) (when (re-search-forward "okay[,\\.]? translate[,\\.]? \\(.+\\)\\|okay[,\\.]? \\(.+?\\) in French" nil t) (let* ((s (or (match-string 1) (match-string 2))) (translation (save-match-data (my-french-en-to-fr s)))) (replace-match (propertize translation 'type-hint translation 'help-echo s)))))) (with-eval-after-load 'whisper (add-hook 'whisper-after-transcription-hook 'my-whisper-translate 70))But that's too easy. I want to actually type things myself so that I get more practice. Something like an autocomplete suggestion would be handy as a way of showing me a hint at the cursor. The usual completion-at-point functions are too eager to insert things if there's only one candidate, so we'll just fake it with an overlay. This code works only with my whisper.el fork because it supports using a list of functions for

whisper-insert-text-at-point.(defun my-whisper-maybe-type-with-hints (text) "Add this function to `whisper-insert-text-at-point'." (let ((hint (and text (org-find-text-property-in-string 'type-hint text)))) (if hint (progn (my-type-with-hint hint) nil) text))) (defvar-local my-practice-overlay nil) (defvar-local my-practice-target nil) (defvar-local my-practice-start nil) (defun my-practice-cleanup () "Remove the overlay and stop monitoring." (when (overlayp my-practice-overlay) (delete-overlay my-practice-overlay)) (setq my-practice-overlay nil my-practice-target nil my-practice-start nil) (remove-hook 'post-command-hook #'my-practice-monitor t)) (defun my-practice-monitor () "Updates hint or cancels." (let* ((pos (point)) (input (buffer-substring-no-properties my-practice-start pos)) (input-len (length input)) (target-len (length my-practice-target))) (cond ((or (< pos my-practice-start) (> pos (+ my-practice-start target-len)) (string-match "[\n\t]" input) (string= input my-practice-target)) (my-practice-cleanup)) ((string-prefix-p (downcase input) (downcase my-practice-target)) (let ((remaining (substring my-practice-target input-len))) (move-overlay my-practice-overlay pos pos) (overlay-put my-practice-overlay 'after-string (propertize remaining 'face 'shadow)))) (t ; typo (move-overlay my-practice-overlay pos pos) (overlay-put my-practice-overlay 'after-string (propertize (substring my-practice-target input-len) 'face 'error)))))) (defun my-type-with-hint (string) "Show hints for STRING." (interactive "sString to practice: ") (my-practice-cleanup) (setq-local my-practice-target string) (setq-local my-practice-start (point)) (setq-local my-practice-overlay (make-overlay (point) (point) nil t t)) (overlay-put my-practice-overlay 'after-string (propertize string 'face 'shadow)) (add-hook 'post-command-hook #'my-practice-monitor nil t))Here's a demonstration of me saying "Okay, this is a test, in French.":

Screencast of using speech recognition to translate into French and provide a hint when typingSince we're faking in-buffer completion here, maybe we can still get away with considering this as an entry for Emacs Carnival February 2026: Completion ? =)

This is part of my Emacs configuration.You can e-mail me at sacha@sachachua.com.

-

🔗 r/Harrogate Looking for date location in Harrogate rss

Hi looking for date location like bar, im mid 20s dating slightly younger or my age.

belgrave in Leeds is my sort of place but gets busy and loud, went with flatmate and was so hard to hear them and talk even pretty early at 6pm, the walk there and from was actually better!!

So looking for somewhere quieter kinda cool vibe , not formal or

The ideal place would be something like Monty’s Bar that’s in Shoreditch which has sofas without it functioning as a main function room that belgrave does, more just like a edgy living room vibe and bar without the party

Thanks

submitted by /u/Apprehensive_Ring666

[link] [comments] -

🔗 r/wiesbaden Stau auflösen 2.0 rss

Die Partei hat immer recht.

submitted by /u/Affisaurus

[link] [comments] -

🔗 r/wiesbaden Morgen Plattenladen Pop-up in Mainz ✨🤝 rss

submitted by /u/EmploymentUnique2066

[link] [comments] -

🔗 remorses/critique critique@0.1.119 release

-

Real-time annotations on shared diff pages — collaborators can now leave comments and annotations directly on

critique.workdiff pages via the--webcommand -

Annotation widget auto-loads — the annotation UI loads automatically on web previews with real-time SSE updates as teammates add or resolve annotations

-

Fixed mobile web preview — mobile redirect now preserves scroll position and the annotation overlay renders correctly on small screens

-

-

🔗 blacktop/ida-mcp-rs v0.9.3 release

What's Changed

New Contributors

Full Changelog :

v0.9.2...v0.9.3What's Changed

- Update to IDA 9.3 release by @blacktop in #5

- fix: three correctness bugs (callees dedup, entrypoints hang, xref_matrix array arg) by @cpkt9762 in #6

New Contributors

Full Changelog :

v0.9.2...v0.9.3 -

🔗 r/york Need food ideas?! rss

Hi, I’m wanting to take my partner for a meal in York and wanting some recommendations. Happy to eat most cuisines but there is a seafood/fish allergy. Thank you in advance. If the place has online menus even better.

submitted by /u/Mormagon108

[link] [comments] -

🔗 hyprwm/Hyprland v0.54.0 release

A big (large), actually huge update for y'all!!

Special thanks to our HIs (Human Intelligences) for powering Hyprland development.

New features:

- cmakelists: add fno-omit-frame-pointer for tracy builds

- desktop/window: add stable id and use it for foreign

- gestures: add cursor zoom (#13033)

- groupbar: added group:groupbar:text_padding (#12818)

- hyprctl: add error messages to hyprctl hyprpaper wallpaper (#13234)

- hyprctl: add overFullscreen field in hyprctl window debug (#13066)

- hyprpm: add full nix integration (#13189)

- keybinds: add inhibiting gestures under shortcut inhibitors (#12692)

- main: add watchdog-fd and safe-mode options to help message (#12922)

- opengl: add debug:gl_debugging (#13183)

- start: add --force-nixgl and check /run/opengl-driver (#13385)

- start: add parent-death handling for BSDs (#12863)

Fixes:

- algo/dwindle: fix focal point not being properly used in movedTarget (#13373)

- algo/master: fix master:orientation being a noop

- algo/master: fix orientation cycling (#13372)

- algo/scrolling: fix crashes on destroying ws

- core/compositor: immediately do readable if adding waiter fails for scheduling state

- compositor: fix calculating x11 work area (#13347)

- config/descriptions: fix use_cpu_buffer (#13285)

- core/xwaylandmgr: fix min/max clamp potentially crashing

- decorations/border: fix damage scheduling after #12665

- desktop/layerRuleApplicator: fix an epic c+p fail

- desktop/ls: fix invalid clamp

- desktop/popup: fix use after free in Popup (#13335)

- desktop/reserved: fix a possible reserved crash (#13207)

- desktop/ruleApplicator: fix typo in border color rule parsing (#12995)

- desktop/rules: fix border colors not resetting. (#13382)

- desktop/workspaceHistory: fix tracking for multiple monitors (#12979)

- desktopAnimationMgr: fix slide direction

- dynamicPermManager: fix c+p fail

- eventLoop: various eventloopmgr fixes (#13091)

- example: fixup config for togglesplit

- fifo: miscellaneous fifo fixes (#13136)

- fix: handle fullscreen windows on special workspaces (#12851)

- hyprctl: fix layerrules not being applied dynamically with hyprctl (#13080)

- hyprerror: add padding & adjust for scale when reserving area (#13158)

- hyprerror: fix horizontal overflow and damage box (#12719)

- hyprpm: fix build step execution

- hyprpm: fix clang-format

- input: fix edge grab resize logic for gaps_out > 0 (#13144)

- input: fix kinetic scroll (#13233)

- keybinds: fix unguarded member access in moveWindowOrGroup (#13337)

- mainLoopExecutor: fix incorrect pipe check

- monitor: fix DS deactivation (#13188)

- multigpu: fix multi gpu checking (#13277)

- nix: add hyprland-uwsm to passthru.providedSessions

- nix: fix evaluation warnings, the xorg package set has been deprecated (#13231)

- pluginsystem: fix crash when unloading plugin hyprctl commands (#12821)

- protocols/cm: Fix image description info events (#12781)

- protocols/contentType: fix missing destroy

- protocols/contentType: fix typo in already constructed check

- protocols/dmabuf: fix DMA-BUF checks and events (#12965)

- protocols/syncobj: fix DRM sync obj support logging (#12946)

- renderer/pass: fix surface opaque region bounds used in occluding (#13124)

- renderer: add surface shader variants with less branching and uniforms (#13030)

- renderer: optimise shader usage further, split shaders and add more caching (#12992)

- renderer: fix dgpu directscanout explicit sync (#13229)

- renderer: fix frame sync (#13061)

- renderer: fix mouse motion in VRR (#12665)

- renderer: fix non shader cm reset (#13027)

- renderer: fix screen export back to srgb (#13148)

- systemd/sdDaemon: fix incorrect strnlen

- target: fix geometry for x11 floats

- tester: fix sleeps waiting for too long (#12774)

- xwayland/xwm: fix _NET_WM_STATE_MAXIMIZED_VERT type (#13151)

- xwayland/xwm: fix window closing when props race

- xwayland: fix size mismatch for no scaling (#13263)

Other:

- Nix: apply glaze patch

- Nix: re-enable hyprpm

- Reapply "hyprpm: bump glaze version"

- Revert "hyprpm: bump glaze version"

- algo/scrolling: adjust focus callbacks to be more intuitive

- animation: reset tick state on session activation (#13024)

- animationMgr: avoid uaf in ::tick() if handleUpdate destroys AV

- anr: open anr dialog on parent's workspace (#12509)

- anr: remove window on closewindow (#13007)

- buffer: add move constructor and operator to CHLBufferReference (#13157)

- cm: block DS for scRGB in HDR mode (#13262)

- cmake: bump wayland-server version to 1.22.91 (#13242)

- cmake: use OpenGL::GLES3 when OpenGL::GL does not exist (#13260)

- cmakelists: don't require debug for tracy

- compositor: guard null view() in getWindowFromSurface (#13255)

- config: don't crash on permission with a config check

- config: return windowrulev2 layerrulev2 error messages (#12847)

- config: support no_vrr rule on vrr 1 (#13250)

- core: optimize some common branches

- decoration: take desiredExtents on all sides into account (#12935)

- dekstop/window: read static rules before guessing initial size if possible (#12783)

- desktop/LS: avoid creating an invalid LS if no monitor could be found (#12787)

- desktop/ls: clamp layer from protocol

- desktop/popup: avoid crash on null popup child in rechecking

- desktop/popup: only remove reserved for window popups

- desktop/reservedArea: clamp dynamic types to 0

- desktop/reservedArea: clamp to 0

- desktop/rules: use pid for exec rules (#13374)

- desktop/window: avoid uaf on instant removal of a window

- desktop/window: catch bad any cast tokens

- desktop/window: go back to the previously focused window in a group (#12763)

- desktop/window: remove old fn defs

- desktop/window: track explicit workspace assignments to prevent X11 configure overwrites (#12850)

- desktop/window: use workArea for idealBB (#12802)

- desktop/windowRule: allow expression in min_size/max_size (#12977)

- desktop/windowRule: use content rule as enum directly (#13275)

- desktop: restore invisible floating window alpha/opacity when focused over fullscreen (#12994)

- event: refactor HookSystem into a typed event bus (#13333)

- eventLoop: remove failed readable waiters

- framebuffer: revert viewport (#12842)

- gestures/fs: remove unneeded floating state switch (#13127)

- hyprctl: adjust json case

- hyprctl: bump hyprpaper protocol to rev 2 (#12838)

- hyprctl: remove trailing comma from json object (#13042)

- hyprerror: clear reserved area on destroy (#13046)

- hyprpm,Makefile: drop cmake ninja build

- hyprpm: bump glaze version

- hyprpm: drop meson dep

- hyprpm: exclude glaze from all targets during fetch

- hyprpm: use provided pkgconf env if available

- i18n: add Romanian translations (#13075)

- i18n: add Traditional Chinese (zh_TW) translations (#13210)

- i18n: add Vietnamese translation (#13163)

- i18n: add bengali translations (#13185)

- i18n: update russian translation (#13247)

- input/TI: avoid UAF in destroy

- input/ti: avoid sending events to inactive TIs

- input: guard null

view()when processing mouse down (#12772) - input: use fresh cursor pos when sending motion events (#13366)

- internal: removed Herobrine

- layershell: restore focus to layer shell surface after popup is destroyed (#13225)

- layout: rethonk layouts from the ground up (#12890)

- monitor: revert "remove disconnected monitor before unsafe state #12544" (#13154)

- nix: remove glaze patch

- opengl/fb: use GL_DEPTH24_STENCIL8 instead of GL_STENCIL_INDEX8 (#13067)

- opengl: allow texture filter to be changed (#13078)

- opengl: set EGL_CONTEXT_RELEASE_BEHAVIOR_KHR if supported (#13114)

- pointermgr: damage only the surface size (#13284)

- pointermgr: remove onRenderBufferDestroy (#13008)

- pointermgr: revert "damage only the surface size (#13284)"

- popup: check for expired weak ptr (#13352)

- popup: reposition with reserved taken into account

- proto/shm: update wl_shm to v2 (#13187)

- protocolMgr: remove IME / virtual input protocols from sandbox whitelist

- protocols/toplevelExport: Support transparency in toplevel export (#12824)

- protocols: implement image-capture-source-v1 and image-copy-capture-v1 (#11709)

- renderer/fb: dont forget to set m_drmFormat (#12833)

- renderer/gl: add internal gl formats and reduce internal driver format conversions (#12879)

- renderer/opengl: invalidate intermediate FBs post render, avoid stencil if possible (#12848)

- renderer: allow tearing with DS with invisible cursors (#13155)

- renderer: better sdr eotf settings (#12812)

- renderer: minor framebuffer and renderbuffer changes (#12831)

- renderer: shader code refactor (#12926)

- shm: ensure we use right gl unpack alignment (#12975)

- start: use nixGL if Hyprland is nix but not NixOS (#12845)

- systemd/sdDaemon: initialize sockaddr_un

- testers: add missing #include

(#12862) - tests: Test the

no_focus_on_activatewindow rule (#13015) - time: ensure type correctness and calculate nsec correctly (#13167)

- versionKeeper: ignore minor rev version

- view: send wl_surface.enter to subsurfaces of popups (#13353)

- wayland/output: return all bound wl_output instances in outputResourceFrom (#13315)

- welcome: skip in safe mode

- xwayland/xwm: get supported props on constructing surface (#13156)

- xwayland/xwm: handle INCR clipboard transfer chunks correctly (#13125)

- xwayland/xwm: prevent onWrite infinite loop and clean orphan transfers (#13122)

- xwayland: ensure NO_XWAYLAND builds (#13160)

- xwayland: normalize OR geometry to logical coords with force_zero_scaling (#13359)

- xwayland: validate size hints before floating (#13361)

Special thanks

As always, massive thanks to our wonderful donators and sponsors:

Sponsors

Diamond

37Signals

Gold

Framework

Donators

Top Supporters:

Seishin, Kay, johndoe42, d, vmfunc, Theory_Lukas, --, MasterHowToLearn, iain, ari-cake, TyrHeimdal, alexmanman5, MadCatX, Xoores, inittux111, RaymondLC92, Insprill, John Shelburne, Illyan, Jas Singh, Joshua Weaver, miget.com, Tonao Paneguini, Brandon Wang, Arkevius, Semtex, Snorezor, ExBhal, alukortti, lzieniew, taigrr, 3RM, DHH, Hunter Wesson, Sierra Layla Vithica, soy_3l.beantser, Anon2033, Tom94

New Monthly Supporters:

monkeypost, lorenzhawkes, Adam Saudagar, Donovan Young, SpoderMouse, prafesa, b3st1m0s, CaptainShwah, Mozart409, bernd, dingo, Marc Galbraith, Mongoss, .tweep, x-wilk, Yngviwarr, moonshiner113, Dani Moreira, Nathan LeSueur, Chimal, edgarsilva, NachoAz, mo, McRealz, wrkshpstudio, crutonjohn

One-time Donators:

macsek, kxwm, Bex Jonathan, Alex, Tomas Kirkegaard, Viacheslav Demushkin, Clive, phil, luxxa, peterjs, tetamusha, pallavk, michaelsx, LichHunter, fratervital, Marpin, SxK, mglvsky, Pembo, Priyav Shah, ChazBeaver, Kim, JonGoogle, matt p, tim, ybaroj, Mr. Monet Baches, NoX, knurreleif, bosnaufal, Alex Vera, fathulk, nh3, Peter, Charles Silva, Tyvren, BI0L0G0S, fonte-della- bonitate, Alex Paterson, Ar, sK0pe, criss, Dnehring, Justin, hylk, 邱國玉KoryChiu, KSzykula, Loutci, jgarzadi, vladzapp, TonyDuan, Brian Starke, Jacobrale, Arvet, Jim C, frank2108, Bat-fox, M.Bergsprekken, sh-r0, Emmerich, davzucky, 3speed, 7KiLL, nu11p7r, Douglas Thomas, Ross, Dave Dashefsky, gignom, Androlax, Dakota, soup, Mac, Quiaro, bittersweet, earthian, Benedict Sonntag, Plockn, Palmen, SD, CyanideData, Spencer Flagg, davide, ashirsc, ddubs, dahol, C. Willard A.K.A Skubaaa, ddollar, Kelvin, Gwynspring, Richard, Zoltán, FirstKix, Zeux, CodeTex, shoedler, brk, Ben Damman, Nils Melchert, Ekoban, D., istoleyurballs , gaKz, ComputerPone, Cell the Führer, defaltastra, Vex, Bulletcharm, cosmincartas, Eccomi, vsa, YvesCB, mmsaf, JonathanHart, Sean Hogge, leat bear, Arizon, JohannesChristel, Darmock, Olivier, Mehran, Anon, Trevvvvvvvvvvvvvvvvvvvv, C8H10N4O2, BeNe, Ko-fi Supporter :3, brad, rzsombor, Faustian, Jemmer, Antonio Sanguigni, woozee, Bluudek, chonaldo, LP, Spanching, Armin, BarbaPeru, Rockey, soba, FalconOne, eizengan, むらびと, zanneth, 0xk1f0, Luccz, Shailesh Kanojia, ForgeWork , Richard Nunez, keith@groupdigital.com, pinklizzy, win_cat_define, Bill, johhnry, Matysek, anonymus, github.com/wh1le, Iiro Ullin, Filinto Delgado, badoken, Simon Brundin, Ethan, Theo Puranen Åhfeldt, PoorProgrammer, lukas0008, Paweł S, Vandroiy, Mathias Brännström, Happyelkk, zerocool823, Bryan, ralph_wiggums, DNA, skatos24, Darogirn , Hidde, phlay, lindolo25, Siege, Gus, Max, John Chukwuma, Loopy, Ben, PJ, mick, herakles, mikeU-1F45F, Ammanas, SeanGriffin, Artsiom, Erick, Marko, Ricky, Vincent mouline

Full Changelog :

v0.53.0...v0.54.0 -

🔗 r/york A wander around the streets.. rss

| submitted by /u/OpportunityNearby827

| submitted by /u/OpportunityNearby827

[link] [comments]

---|--- -

🔗 News Minimalist 🐢 Pakistan and Afghanistan at war + 10 more stories rss

In the last 3 days ChatGPT read 94301 top news stories. After removing previously covered events, there are 11 articles with a significance score over 5.5.

[6.2] Afghanistan and Pakistan engage in open war following cross-border attacks —abcnews.com(+215)

Pakistan’s defense minister declared an “open war” with Afghanistan on Friday following a significant escalation in cross-border military strikes, marking the most serious confrontation between the neighbors in years.

Following Afghan cross-border attacks Thursday, Pakistan launched retaliatory airstrikes on Kabul and Kandahar. Tensions have peaked over Pakistan’s claims that Afghanistan harbors TTP militants, an allegation Kabul denies while citing civilian casualties from recent Pakistani military operations along the porous frontier.

[6.3] Pentagon demands unrestricted military access to Anthropic's AI or risks contract loss —apnews.com(+100)

Defense Secretary Pete Hegseth gave Anthropic a Friday deadline to allow unrestricted military use of its AI or risk losing government contracts and facing Defense Production Act intervention.

Defense officials suggested invoking the Cold War-era Defense Production Act to bypass Anthropic's ethical restrictions. This unprecedented move aims to integrate Claude AI into military networks despite leadership concerns regarding autonomous weaponry, mass surveillance, and safety limits for artificial intelligence.

While the DPA historically boosts production during emergencies like pandemics, experts warn that using it to dictate service terms is legally questionable and could trigger significant litigation between Anthropic and the government.

[5.5] EU expands funding for abortion access within the bloc —apnews.com(+24)

The European Commission has authorized using the 147 billion euro European Social Funds Plus to support citizens traveling to access safe abortions from EU nations with restrictive health laws.

This decision follows the My Voice, My Choice campaign, which gathered over one million signatures via the European Citizens’ Initiative. While no new fund was created, the Commission confirmed that existing resources can defray costs for women seeking legal healthcare across borders.

Although abortion is legal in most of Europe, it remains highly restricted in countries like Poland and Malta. Proponents call the move a victory for social justice, while some critics oppose the intervention.

Highly covered news with significance over 5.5

[6.0] Chip giant Nvidia defies AI concerns with record $215bn revenue — bbc.com (+138)

[6.5] World-first stem cell therapy trial shows promise for treating spina bifida in the womb — nature.com (+6)

[5.9] France's National Assembly approves assisted dying bill — lalibre.be (French) (+7)

[5.9] Chinese scientists transform desert sand into fertile soil using microbes — en.tempo.co (+2)

[5.7] Novartis settles with Henrietta Lacks' estate over use of her cells — independent.co.uk (+10)

[5.6] Germany's ruling parties reverse heat pump mandate, allowing homeowner choice — nzz.ch (German) (+9)

[5.5] UK's first geothermal power plant generates electricity for 10,000 homes and produces lithium — bbc.com (+2)

[5.5] Chilean telescope captures detailed view of Milky Way's star-forming core — apnews.com (+33)

Thanks for reading!

— Vadim

You can customize this newsletter with premium.

-

🔗 r/wiesbaden essbare Blumen rss

Hat jemand eine Idee, wo ich in Wiesbaden essbare Blumen kaufen kann, um einen Kuchen zu dekorieren? :)

submitted by /u/Turbulent_Life_5826

[link] [comments] -

🔗 r/Yorkshire Jake Lambert will be headlining a comedy evening at The Glee Club Leeds on 10th June, in aid of Epilepsy Action! rss

| submitted by /u/NationalDoodleDay

| submitted by /u/NationalDoodleDay

[link] [comments]

---|--- -

🔗 3Blue1Brown (YouTube) The most beautiful formula not enough people understand rss

On the volumes of higher-dimensional spheres Explore the 3b1b virtual career fair: See https://3b1b.co/talent Become a supporter for early views of new videos: https://3b1b.co/support An equally valuable form of support is to simply share the videos. Home page: https://www.3blue1brown.com

Thanks to UC Santa Cruz for letting me film there, and special thanks to Pedro Morales-Almazan for arranging everything.

My video on Numberphile with a fun application of this problem: https://youtu.be/6_yU9eJ0NxA

Timestamps: 0:00 - Introduction 1:01 - Random puzzle 6:16 - Outside the box 14:35 - Setting up the volume grid 21:14 - Why 4πr^2 25:21 - Archimedes in higher dimensions 36:17 - The general formula 40:40 - 1/2 factorial 44:58 - Why 5D spheres are the biggest 50:16 - Concentration at the surface 54:27 - A unit-free interpretation 57:50 - 3b1b Talent 59:13 - Explaining the intro animation

These animations are largely made using a custom Python library, manim. See the FAQ comments here: https://3b1b.co/faq#manim

Music by Vincent Rubinetti. https://vincerubinetti.bandcamp.com/album/the-music-of-3blue1brown https://open.spotify.com/album/1dVyjwS8FBqXhRunaG5W5u

3blue1brown is a channel about animating math, in all senses of the word animate. If you're reading the bottom of a video description, I'm guessing you're more interested than the average viewer in lessons here. It would mean a lot to me if you chose to stay up to date on new ones, either by subscribing here on YouTube or otherwise following on whichever platform below you check most regularly.

Mailing list: https://3blue1brown.substack.com Twitter: https://twitter.com/3blue1brown Bluesky: https://bsky.app/profile/3blue1brown.com Instagram: https://www.instagram.com/3blue1brown Reddit: https://www.reddit.com/r/3blue1brown Facebook: https://www.facebook.com/3blue1brown Patreon: https://patreon.com/3blue1brown Website: https://www.3blue1brown.com

-

🔗 r/LocalLLaMA PewDiePie fine-tuned Qwen2.5-Coder-32B to beat ChatGPT 4o on coding benchmarks. rss

| submitted by /u/hedgehog0

| submitted by /u/hedgehog0

[link] [comments]

---|--- -

🔗 r/Harrogate New Royal Hunting Forest exhibition in Knaresborough rss

submitted by /u/No_Nose_3849

[link] [comments] -

🔗 r/Yorkshire Whitby against racism rss

| submitted by /u/johnsmithoncemore

| submitted by /u/johnsmithoncemore

[link] [comments]

---|--- -

🔗 r/york Hidden gems to explore in York rss

| Reddit tricked me into reading an AI-generated page about hidden gems in York. I'm pleased to report that everyone's favourite cafe has made the list! See the screenshot below. Reddit cites u/WhapXI's comment and u/WhatWeHavingForTea's comment as evidence. I would like to compliment the AI on its refined taste in cafes. https://preview.redd.it/v4yf71es11mg1.png?width=865&format=png&auto=webp&s=0df8d86bbac10bb61f12d53de2e64b9921681f8e See for yourself here: https://www.reddit.com/answers/7d474f44-7294-4c6f-ad77-e40716ed14f8/?q=Hidden+gems+to+explore+in+York&source=PDP&tl=en . submitted by /u/sbernard

| Reddit tricked me into reading an AI-generated page about hidden gems in York. I'm pleased to report that everyone's favourite cafe has made the list! See the screenshot below. Reddit cites u/WhapXI's comment and u/WhatWeHavingForTea's comment as evidence. I would like to compliment the AI on its refined taste in cafes. https://preview.redd.it/v4yf71es11mg1.png?width=865&format=png&auto=webp&s=0df8d86bbac10bb61f12d53de2e64b9921681f8e See for yourself here: https://www.reddit.com/answers/7d474f44-7294-4c6f-ad77-e40716ed14f8/?q=Hidden+gems+to+explore+in+York&source=PDP&tl=en . submitted by /u/sbernard

[link] [comments]

---|--- -

🔗 r/reverseengineering magisk-renef — Auto-run renef dynamic instrumentation server on Android via Magisk/KernelSU rss

submitted by /u/ResponsiblePlant8874

[link] [comments] -

🔗 r/york Some photos I took of your beautiful city last week! rss

| Went to York on holiday last week to see prima facie and took some photos. I had the best time and everyone was super nice, definitely coming back sometime:) submitted by /u/Organic_Repair8717

| Went to York on holiday last week to see prima facie and took some photos. I had the best time and everyone was super nice, definitely coming back sometime:) submitted by /u/Organic_Repair8717

[link] [comments]

---|--- -

🔗 r/Leeds Bra fitting rss

I’ve been debating to get a proper bra fitting before I buy new but idk where is best in the Leeds city centre to get fitted like there’s few bra shops there pour moi , Ann summers and bravissimo all I know of unless there is more I wanted to know where is best to get decent fitted

submitted by /u/Trashbandit_seal

[link] [comments] -

🔗 r/Yorkshire Fishlake, St Cuthbert, South Yorkshire The 12thC south doorway, with four orders of sculpture, is one of Yorkshire’s finest examples of a Romanesque door. rss

| @simonsmith submitted by /u/Mundane-Temporary426

| @simonsmith submitted by /u/Mundane-Temporary426

[link] [comments]

---|--- -

🔗 r/Leeds Mitski show rss

Hello all,

I've got the tickets for the mistki show happening in Leeds in May. I know it's still some time but i was wondering if anyone else has also got the tickets and looking to go together?

I dont live in Leeds anymore and none of my friends listen to Mistki so it would be nice to go with someone and enjoy the show together.

Thanks!

submitted by /u/benzene_burner33

[link] [comments] -

🔗 r/reverseengineering Building a map tool for Cataclismo rss

submitted by /u/Bobby_Bonsaimind

[link] [comments] -

🔗 Stavros' Stuff Latest Posts I made a voice note taker rss

It's small and tiny and so cuteHave you ever always wanted a very very small voice note recorder that would fit in your pocket? Something that would always work, and always be available to take a note at the touch of a button, with no fuss? Me neither.

Until, that is, I saw the Pebble Index 01, then I absolutely needed it right away and had to have it in my life immediately, but alas, it is not available, plus it’s disposable, and I don’t like creating e-waste. What was a poor maker like me supposed to do when struck down so cruelly by the vicissitudes of fate?

There was only one thing I could do:

I could build my own, shitty version of it for $8, and that’s exactly what I did.

The problem

Like everyone else, I have some sort of undiagnosed ADHD, which manifests itself as my brain itching for a specific task, and the itch becoming unbearable unless I scratch it. This usually results in me getting my

-

🔗 Baby Steps How Dada enables internal references rss

In my previous Dada blog post, I talked about how Dada enables composable sharing. Today I'm going to start diving into Dada's permission system; permissions are Dada's equivalent to Rust's borrow checker.

Goal: richer, place-based permissions

Dada aims to exceed Rust's capabilities by using place-based permissions. Dada lets you write functions and types that capture both a value and things borrowed from that value.

As a fun example, imagine you are writing some Rust code to process a comma- separated list, just looking for entries of length 5 or more:

let list: String = format!("...something big, with commas..."); let items: Vec<&str> = list .split(",") .map(|s| s.trim()) // strip whitespace .filter(|s| s.len() > 5) .collect();One of the cool things about Rust is how this code looks a lot like some high- level language like Python or JavaScript, but in those languages the

splitcall is going to be doing a lot of work, since it will have to allocate tons of small strings, copying out the data. But in Rust the&strvalues are just pointers into the original string and sosplitis very cheap. I love this.On the other hand, suppose you want to package up some of those values, along with the backing string, and send them to another thread to be processed. You might think you can just make a struct like so…

struct Message { list: String, items: Vec<&str>, // ---- // goal is to hold a reference // to strings from list }…and then create the list and items and store them into it:

let list: String = format!("...something big, with commas..."); let items: Vec<&str> = /* as before */; let message = Message { list, items }; // ---- // | // This *moves* `list` into the struct. // That in turn invalidates `items`, which // is borrowed from `list`, so there is no // way to construct `Message`.But as experienced Rustaceans know, this will not work. When you have borrowed data like an

&str, that data cannot be moved. If you want to handle a case like this, you need to convert from&strinto sending indices, owned strings, or some other solution. Argh!Dada's permissions use places , not lifetimes

Dada does things a bit differently. The first thing is that, when you create a reference, the resulting type names the place that the data was borrowed from , not the lifetime of the reference. So the type annotation for

itemswould sayref[list] String1 (at least, if you wanted to write out the full details rather than leaving it to the type inferencer):let list: given String = "...something big, with commas..." let items: given Vec[ref[list] String] = list .split(",") .map(_.trim()) // strip whitespace .filter(_.len() > 5) // ------- I *think* this is the syntax I want for closures? // I forget what I had in mind, it's not implemented. .collect()I've blogged before about how I would like to redefine lifetimes in Rust to be places as I feel that a type like

ref[list] Stringis much easier to teach and explain: instead of having to explain that a lifetime references some part of the code, or what have you, you can say that "this is aStringthat references the variablelist".But what's also cool is that named places open the door to more flexible borrows. In Dada, if you wanted to package up the list and the items, you could build a

Messagetype like so:class Message( list: String items: Vec[ref[self.list] String] // --------- // Borrowed from another field! ) // As before: let list: String = "...something big, with commas..." let items: Vec[ref[list] String] = list .split(",") .map(_.strip()) // strip whitespace .filter(_.len() > 5) .collect() // Create the message, this is the fun part! let message = Message(list.give, items.give)Note that last line -

Message(list.give, items.give). We can create a new class and movelistinto it along withitems, which borrows from list. Neat, right?OK, so let's back up and talk about how this all works.

References in Dada are the default

Let's start with syntax. Before we tackle the

Messageexample, I want to go back to theCharacterexample from previous posts, because it's a bit easier for explanatory purposes. Here is some Rust code that declares a structCharacter, creates an owned copy of it, and then gets a few references into it.struct Character { name: String, class: String, hp: u32, } let ch: Character = Character { name: format!("Ferris"), class: format!("Rustacean"), hp: 22 }; let p: &Character = &ch; let q: &String = &p.name;The Dada equivalent to this code is as follows:

class Character( name: String, klass: String, hp: u32, ) let ch: Character = Character("Tzara", "Dadaist", 22) let p: ref[ch] Character = ch let q: ref[p] String = p.nameThe first thing to note is that, in Dada, the default when you name a variable or a place is to create a reference. So

let p = chdoesn't movech, as it would in Rust, it creates a reference to theCharacterstored inch. You could also explicitly writelet p = ch.ref, but that is not preferred. Similarly,let q = p.namecreates a reference to the value in the fieldname. (If you wanted to move the character, you would writelet ch2 = ch.give, notlet ch2 = chas in Rust.)Notice that I said

let p = ch"creates a reference to theCharacterstored inch". In particular, I did not say "creates a reference toch". That's a subtle choice of wording, but it has big implications.References in Dada are not pointers

The reason I wrote that

let p = ch"creates a reference to theCharacterstored inch" and not "creates a reference toch" is because, in Dada, references are not pointers. Rather, they are shallow copies of the value, very much like how we saw in the previous post that ashared Characteracts like anArc<Character>but is represented as a shallow copy.So where in Rust the following code…

let ch = Character { ... }; let p = &ch; let q = &ch.name;…looks like this in memory…

# Rust memory representation Stack Heap ───── ──── ┌───► ch: Character { │ ┌───► name: String { │ │ buffer: ───────────► "Ferris" │ │ length: 6 │ │ capacity: 12 │ │ }, │ │ ... │ │ } │ │ └──── p │ └── qin Dada, code like this

let ch = Character(...) let p = ch let q = ch.namewould look like so

# Dada memory representation Stack Heap ───── ──── ch: Character { name: String { buffer: ───────┬───► "Ferris" length: 6 │ capacity: 12 │ }, │ .. │ } │ │ p: Character { │ name: String { │ buffer: ───────┤ length: 6 │ capacity: 12 │ ... │ } │ } │ │ q: String { │ buffer: ───────────────┘ length: 6 capacity: 12 }Clearly, the Dada representation takes up more memory on the stack. But note that it doesn 't duplicate the memory in the heap, which tends to be where the vast majority of the data is found.

Dada talks about values not references

This gets at something important. Rust, like C, makes pointers first-class. So given

x: &String,xrefers to the pointer and*xrefers to its referent, theString.Dada, like Java, goes another way.

x: ref Stringis aStringvalue - including in memory representation! The difference between agiven String,shared String, andref Stringis not in their memory layout, all of them are the same, but they differ in whether they own their contents.2So in Dada, there is no

*xoperation to go from "pointer" to "referent". That doesn't make sense. Your variable always contains a string, but the permissions you have to use that string will change.In fact, the goal is that people don 't have to learn the memory representation as they learn Dada, you are supposed to be able to think of Dada variables as if they were all objects on the heap, just like in Java or Python, even though in fact they are stored on the stack.3

Rust does not permit moves of borrowed data

In Rust, you cannot move values while they are borrowed. So if you have code like this that moves

chintoch1…let ch = Character { ... }; let name = &ch.name; // create reference let ch1 = ch; // moves `ch`…then this code only compiles if

nameis not used again:let ch = Character { ... }; let name = &ch.name; // create reference let ch1 = ch; // ERROR: cannot move while borrowed let name1 = name; // use reference again…but Dada can

There are two reasons that Rust forbids moves of borrowed data:

- References are pointers, so those pointers may become invalidated. In the example above,

namepoints to the stack slot forch, so ifchwere to be moved intoch1, that makes the reference invalid. - The type system would lose track of things. Internally, the Rust borrow checker has a kind of "indirection". It knows that

chis borrowed for some span of the code (a "lifetime"), and it knows that the lifetime in the type ofnameis related to that lifetime, but it doesn't really know thatnameis borrowed fromchin particular.4

Neither of these apply to Dada:

- Because references are not pointers into the stack, but rather shallow copies, moving the borrowed value doesn't invalidate their contents. They remain valid.

- Because Dada's types reference actual variable names, we can modify them to reflect moves.

Dada tracks moves in its types

OK, let's revisit that Rust example that was giving us an error. When we convert it to Dada, we find that it type checks just fine:

class Character(...) // as before let ch: given Character = Character(...) let name: ref[ch.name] String = ch.name // -- originally it was borrowed from `ch` let ch1 = ch.give // ------- but `ch` was moved to `ch1` let name1: ref[ch1.name] = name // --- now it is borrowed from `ch1`Woah, neat! We can see that when we move from

chintoch1, the compiler updates the types of the variables around it. So actually the type ofnamechanges toref[ch1.name] String. And then when we move fromnametoname1, that's totally valid.In PL land, updating the type of a variable from one thing to another is called a "strong update". Obviously things can get a bit complicated when control-flow is involved, e.g., in a situation like this:

let ch = Character(...) let ch1 = Character(...) let name = ch.name if some_condition_is_true() { // On this path, the type of `name` changes // to `ref[ch1.name] String`, and so `ch` // is no longer considered borrowed. ch1 = ch.give ch = Character(...) // not borrowed, we can mutate } else { // On this path, the type of `name` // remains unchanged, and `ch` is borrowed. } // Here, the types are merged, so the // type of `name` is `ref[ch.name, ch1.name] String`. // Therefore, `ch` is considered borrowed here.Renaming lets us call functions with borrowed values

OK, let's take the next step. Let's define a Dada function that takes an owned value and another value borrowed from it, like the name, and then call it:

fn character_and_name( ch1: given Character, name1: ref[ch1] String, ) { // ... does something ... }We could call this function like so, as you might expect:

let ch = Character(...) let name = ch.name character_and_name(ch.give, name)So…how does this work? Internally, the type checker type-checks a function call by creating a simpler snippet of code, essentially, and then type- checking that. It's like desugaring but only at type-check time. In this simpler snippet, there are a series of

letstatements to create temporary variables for each argument. These temporaries always have an explicit type taken from the method signature, and they are initialized with the values of each argument:// type checker "desugars" `character_and_name(ch.give, name)` // into more primitive operations: let tmp1: given Character = ch.give // --------------- ------- // | taken from the call // taken from fn sig let tmp2: ref[tmp1.name] String = name // --------------------- ---- // | taken from the call // taken from fn sig, // but rewritten to use the new // temporariesIf this type checks, then the type checker knows you have supplied values of the required types, and so this is a valid call. Of course there are a few more steps, but that's the basic idea.

Notice what happens if you supply data borrowed from the wrong place:

let ch = Character(...) let ch1 = Character(...) character_and_name(ch, ch1.name) // --- wrong place!This will fail to type check because you get:

let tmp1: given Character = ch.give let tmp2: ref[tmp1.name] String = ch1.name // -------- // has type `ref[ch1.name] String`, // not `ref[tmp1.name] String`Class constructors are "just" special functions

So now, if we go all the way back to our original example, we can see how the

Messageexample worked:class Message( list: String items: Vec[ref[self.list] String] )Basically, when you construct a

Message(list, items), that's "just another function call" from the type system's perspective, except thatselfin the signature is handled carefully.This is modeled, not implemented

I should be clear, this system is modeled in the dada- model repository, which implements a kind of "mini Dada" that captures what I believe to be the most interesting bits. I'm working on fleshing out that model a bit more, but it's got most of what I showed you here.5 For example, here is a test that you get an error when you give a reference to the wrong value.

The "real implementation" is lagging quite a bit, and doesn't really handle the interesting bits yet. Scaling it up from model to real implementation involves solving type inference and some other thorny challenges, and I haven't gotten there yet - though I have some pretty interesting experiments going on there too, in terms of the compiler architecture.6

This could apply to Rust

I believe we could apply most of this system to Rust. Obviously we'd have to rework the borrow checker to be based on places, but that's the straight- forward part. The harder bit is the fact that

&Tis a pointer in Rust, and that we cannot readily change. However, for many use cases of self-references, this isn't as important as it sounds. Often, the data you wish to reference is living in the heap, and so the pointer isn't actually invalidated when the original value is moved.Consider our opening example. You might imagine Rust allowing something like this in Rust:

struct Message { list: String, items: Vec<&{self.list} str>, }In this case, the

strdata is heap-allocated, so moving the string doesn't actually invalidate the&strvalue (it would invalidate an&Stringvalue, interestingly).In Rust today, the compiler doesn't know all the details of what's going on.

Stringhas aDerefimpl and so it's quite opaque whetherstris heap- allocated or not. But we are working on various changes to this system in the Beyond the&goal, most notably the Field Projections work. There is likely some opportunity to address this in that context, though to be honest I'm behind in catching up on the details.

-

I'll note in passing that Dada unifies

strandStringinto one type as well. I'll talk in detail about how that works in a future blog post. ↩︎ -

This is kind of like C++ references (e.g.,

String&), which also act "as if" they were a value (i.e., you writes.foo(), nots->foo()), but a C++ reference is truly a pointer, unlike a Dada ref. ↩︎ -

This goal was in part inspired by a conversation I had early on within Amazon, where a (quite experienced) developer told me, "It took me months to understand what variables are in Rust". ↩︎

-

I explained this some years back in a talk on Polonius at Rust Belt Rust, if you'd like more detail. ↩︎

-

No closures or iterator chains! ↩︎

-

As a teaser, I'm building it in async Rust, where each inference variable is a "future" and use "await" to find out when other parts of the code might have added constraints. ↩︎

- References are pointers, so those pointers may become invalidated. In the example above,

-

- February 26, 2026

-

🔗 IDA Plugin Updates IDA Plugin Updates on 2026-02-26 rss

IDA Plugin Updates on 2026-02-26

New Releases:

Activity:

- bitopt

- b25b2adc: chore: bump plugin version in metadata

- capa

- DeepExtractIDA

- ida-domain

- e32528a6: Enable tests also for ida 9.3 (#46)

- ida-function-string-associate

- ida-hcli

- msc-thesis-LLMs-to-rank-decompilers

- 352daad9: update

- pdb

- playlist

- e6a3a01c: skill differential

- python-elpida_core.py

- 25f37bd5: fix: add crystallization_hub + diplomatic_handshake to Dockerfile COPY

- 3a790123: fix: D0 frozen core becomes witness/observer of D11 synthesis

- df96dfb9: fix: Gemini model strings — no new key needed, model names changed

- 77f4c456: Section 23: Oracle cross-framework coordinator + 27-axiom federation …

- 2f0db308: Checkpoint Section 22: Qwen lost-code recovery

- aa8a4bbc: Update CHECKPOINT_MARCH1: Wave 3 results + battery test synthesis + 7…

- 923ad3e3: Wave 3 complete: syntactic-intent evasion validated + EEE + living ax…

- 6280c63d: Wave 3: automated minimal-rephrasing execution script

- 21fb382d: fix: WorldEmitter — filter test entries + S3-backed watermark

- tenrec

- dadc7dde: Merge pull request #25 from nonetype/feat/main-thread-executor

- vdump

- 9c589dad: Minor changes

- bitopt

-

🔗 @binaryninja@infosec.exchange Do you even lift? Because Glenn does -- and he'll walk you through the steps mastodon

Do you even lift? Because Glenn does -- and he'll walk you through the steps so you can get decompilation by implementing lifting for your customer architectures in Binary Ninja in part 2/3 of our architecture guide:

-

🔗 r/york Micklegate filming rss

Not a huge inconvenience but not being able to walk up just now without there having been any signage in advance has been a pain - I've just finished a really long shift so the added detour wasn't hugely appreciated - I'd have planned a different route home!

submitted by /u/Sir-Snickolas

[link] [comments] -

🔗 Kagi release notes Feb 26th, 2026 - Smoothing the edges rss

Kagi Search

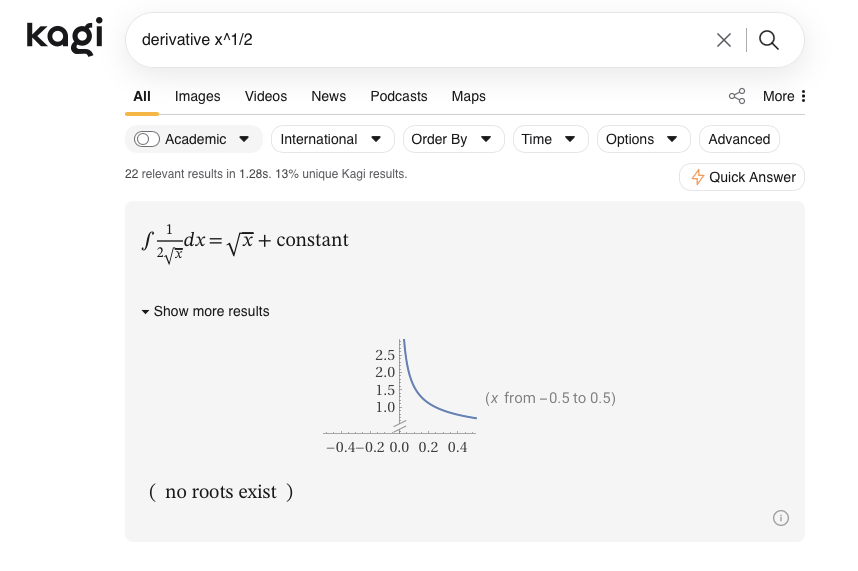

Wolfram|Alpha widget supercharged

We're introducing a new and improved Wolfram|Alpha widget with support for rich equations, plots, better region-dependent queries, and more!

Other improvements and bug fixes

- Kagi Privacy Pass extension conflicts with Kagi Search extension in Firefox, breaking login token recognition in private browsing windows. #6432 @stone. This was a bug in Firefox - thank you to Mozilla for the fix!

- The after-login redirect doesn't work for maps or assistant #8407 @Boomkop3

- !hn bang does not use correct URL #8534 @davej

- Emoji search: Japanese symbols should come up when searching for themselves #9823 @karol

- Not possible to block TLD in personalised results #7104 @MrMoment

- Search box submits incomplete text #9836 @ssg

- Translation won't trigger in search until I reload the page #8409 @Gamesnic

- Content appears behind Dynamic Island on landscape iOS #9772 @ohnojono

- Can’t add a team member from iOS Safari #9003 @pbronez

- Kagi Wolfram answer doesn't match direct Wolfram query #9866 @RonanCJ

- Reverse image search comes up with empty spots #8666 @Boomkop3

- Opening search results in new tab with Vimium shortcuts doesn't work when authenticating via Privacy Pass #9894 @kbkle

- Image search directly from regular search bar #9889 @Boomkop3

- "Weather Saturday" returns for location Saturdaygua instead of weather on saturday #2388 @kevin51jiang

- Searching

Pseudo Codegives(data not available)from Kagi Knowledge #9922 @xjc - "Interesting Finds" does not respect filter rules #8578 @dabluecaboose

- Search snap: Reddit - @r returns little to no results on iOS due to

old.reddit.com#9582 @owl - Allow setting open_snap_domain for custom bangs #9901 @shorden

- Low quality translated Reddit results #5212 @bram

- Click on "More Results" loses the focus #5736 @expurple

PS, we've started publishing results for your SlopStop reports -- see them here. More details in the upcoming changelog.

Kagi Assistant

- Kagi Assistant - Ki Model - Toggle detailed search results broken with a lot of searches #7880 @Elias

- Assistant turns email address like text into mailto in codeblocks #9843 @Numerlor

- Slash-commands being sent to model along with system prompt #9852 @igakagi

- Ki can't access uploaded images from Python #7376 @fxgn

Kagi Maps

- Kagi maps "No POI found matching the query" #9799 @Jobby

- Maps sends double-URL encoded string to images #9698 @gdfgfasf

- Inconsistent Display of Postal Codes #9711 @iamjameswalters

- Maps Search Not Finding Some Places On First Try #9637 @Gredharm

- Searching "Chagos Islands" (or other locations w/o POI data) fails; doesn't fall-back to entry w/ valid POI data #9888 @Cajunvoodoo

Kagi Translate

- Translate Document - "Upgrade to premium" / inconsistent limits #9811 @widow5131

- Kagi translate mixup: Swiss High German vs. Swiss German #9827 @kagiiskey

- I'm interested in integrating Kagi Translate with Anki Flashcards #9750 @johnsturgeon

- Ability to set the default translation quality #9802 @PetrIako

- Translating input to same language: English->English #9690 @Cyb3rKo

- Translate document - premium not working #9879 @widow5131

- Fix needed for Korean word order of "total" count #9718 @Hanbyeol

- Wrong interface language in Kagi Translate #9880 @jstolarek

- Kagi Translate Firefox extension: incomplete translation on some sites #9862 @exzombie

- When I type a long sentence, it freezes and I can't scroll. #9940 @ZK

Kagi Translate - iOS and Android apps

- Fix needed for Korean word order of "total" count #9718 @Hanbyeol

- Make “Translate with Kagi” appear directly in Android text selection menu #9801 @Matou

- Added 'email' writing style for proofreading

- Added setting to toggle haptics ON/OFF

- Fixed UI issue on Android where certain elements were being drawn under system bars

Post of the week

Here is this week's featured social media mention:

Don't forget to follow us and tag us in your comments, we love hearing from you!

Kagi Specials

Kagi is happy to be part of the privacy alliance with Windscribe, a feature-rich VPN with built-in ad and malware blocking and audited no-logs policy.

Through this partnership via Kagi Specials, Kagi members receive a 3-month Windscribe Pro trial, then lock in the Pro plan at just $49/yr for life. In turn, Windscribe members get 3 months of Kagi's Professional plan.

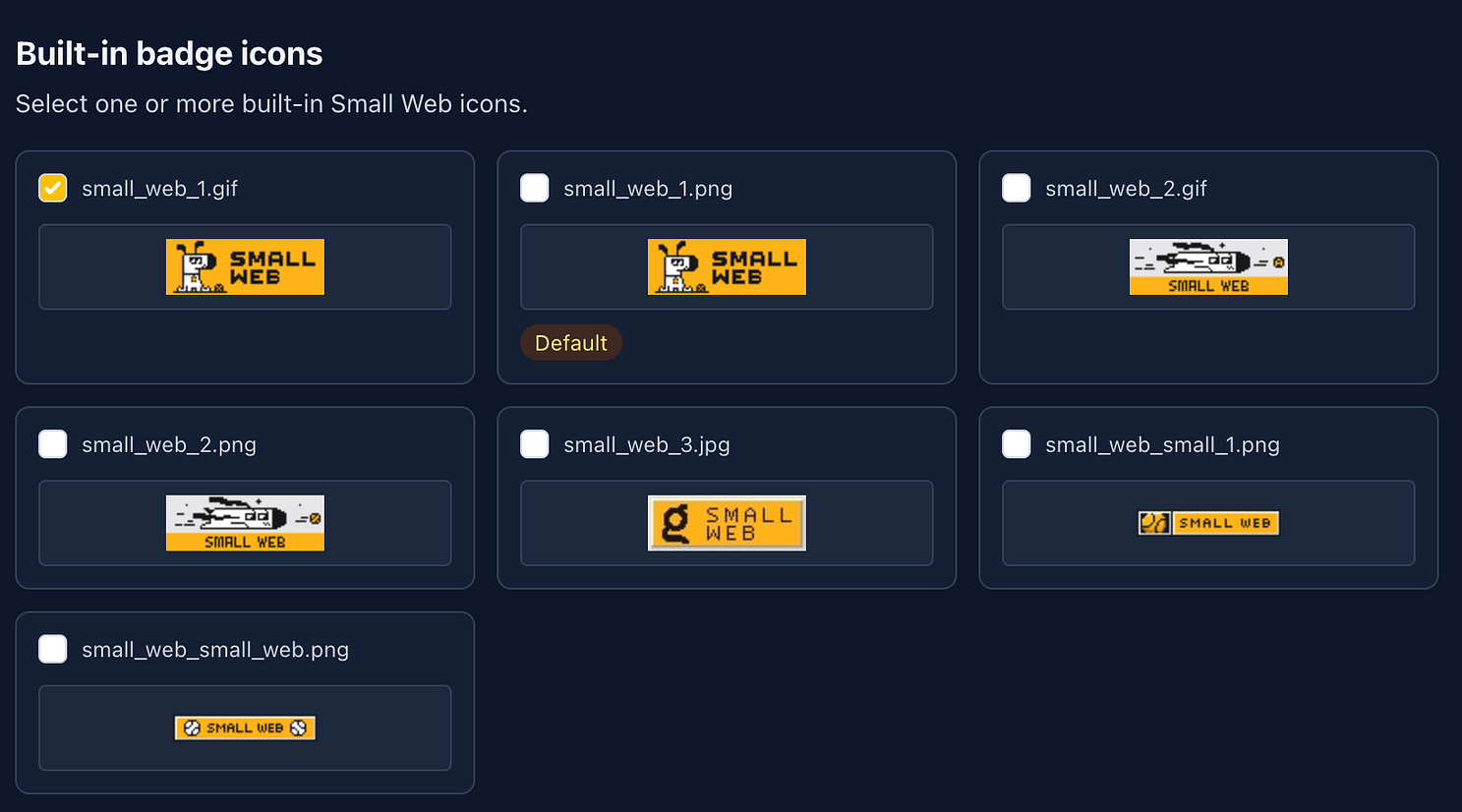

Community creations

If you're using Scribbles to run your blog, you can now add Small Web badges directly to your blog footer, just head to the new "Small Web" section in your blog settings:

Kagi on TV!

Kagi was prominently featured as a private alternative to Google on KTLA 5 News, including an interview with Kagi's very own John Bardinelli, who recently joined the team as our Growth Manager.

-

🔗 r/reverseengineering From DDS Packets to Robot Shells: Two RCEs in Unitree Robots (CVE-2026-27509 & CVE-2026-27510) rss

submitted by /u/WiseTuna

[link] [comments] -

🔗 sacha chua :: living an awesome life Emacs completion and handling accented characters with orderless rss

I like using the orderless completion package for Emacs because it allows me to specify different parts of a completion candidate than any order I want. Because I'm learning French, I want commands like

consult-line(which uses minibuffer completion) andcompletion-at-point(which uses in-buffer completion) to also match candidates where the words might have accented characters. For example, instead of having to type "utilisé" with the accented é, I want to type "utilise" and have it match both "utilise" and "utilisé".(defvar my-orderless-accent-replacements '(("a" . "[aàáâãäå]") ("e" . "[eèéêë]") ("i" . "[iìíîï]") ("o" . "[oòóôõöœ]") ("u" . "[uùúûü]") ("c" . "[cç]") ("n" . "[nñ]"))) ; in case anyone needs ñ for Spanish (defun my-orderless-accent-dispatch (pattern &rest _) (seq-reduce (lambda (prev val) (replace-regexp-in-string (car val) (cdr val) prev)) my-orderless-accent-replacements pattern)) (use-package orderless :custom (completion-styles '(orderless basic)) (completion-category-overrides '((file (styles basic partial-completion)))) (orderless-style-dispatchers '(my-orderless-accent-dispatch orderless-affix-dispatch)))

Figure 1: Screenshot of consult-line showing matching against accented characters

Figure 2: Screenshot of completion-at-point matching "fev" with "février" This is an entry for Emacs Carnival February 2026: Completion.

This is part of my Emacs configuration.You can comment on Mastodon or e-mail me at sacha@sachachua.com.

-

🔗 sacha chua :: living an awesome life IndieWeb Carnival February 2026: Intersecting interests rss

In EnglishThis month, the theme for the IndieWeb Carnival is "Converging Interests." It might actually be easier to list which of my interests don't converge. My interests often overlap. I'll start with a description of my main interests and how they're linked.

Programming is generally useful. I'm particularly interested in automation and cognitive and physical aids like voice interfaces. I love Emacs. It's ostensibly a text editor, but I've tinkered with it to such an extent that I use it for almost everything: managing my notes and tasks, of course, but even recording and editing audio files and organizing my drawings.

Writing helps me think, remember, and share. Org Mode in Emacs allows me to use the technique of literate programming, which combines explaining and coding. Some ideas are easier to think about and express through drawing, which allows me to explore them non-linearly. My drawings apply to all my interests, such as parenting, technology, learning, and planning. Sketchnoting is a great way to learn many things, share my notes, and remember specific moments. For example, my daughter is eager to finish a visual summary we developed together, which was possible because I had written many notes in the web journal I developed and in my French journal.

I've been learning French for the past 4 months, and that also touches various aspects of my daily life. I help my daughter with school, I try to use AI, I tinker with my tools, I watch shows, and I look up words related to my interests. For instance, I updated my handwriting font to include accented letters. This combined drawing, programming, and naturally, learning French. I also modified my writing environment in Emacs to look up words in the dictionary and display AI feedback. I particularly enjoy exploring learning techniques with my daughter, such as flashcards and stories following the principle of comprehensible input. Which methods are effective against which challenges, and how can we make the most of available technology? What we learn will help us across all subjects.

Similarly, learning the piano helps me appreciate the challenge and pleasure of making progress. It's also a good way to help my daughter learn it as well.

Since my life is filled with intertwining interests, it is important to manage my attention despite many distracting temptations, such as programming new tools. I might start a task and then find myself doing something completely different after a series of small, totally logical, steps. You know how it goes—one thing leads to another. So I have to write my notes as I go. There is no rush and few of my tasks are urgent, so when I lose my train of thought, I can laugh and look for it again. If I write and share these notes, someone might find them even years later and remind me of them. It is very difficult to choose a moment to stop exploring and to publish my notes. The temptation is always to keep following a new idea.

Fortunately, the cumulative effect of hobbies that complement each other encourages me to grow, and when I am blocked in one direction, one or two other paths usually open up. Speaking of directions, I find it difficult to write when I want to introduce two or more simultaneous streams of ideas because writing is so linear. Still, it's better to write even if it's a bit disjointed.

I think speech recognition helps me capture more ideas, and I'm looking forward to how advances in technology can help me make them happen. I can also get better by learning and linking new curiosities to my other curiosities. I look forward to seeing what kinds of things are possible.

Although I have several hours of freedom now that my daughter can do many things herself, there's always more that I want to learn. Intertwined hobbies thrive, while isolated hobbies are forgotten. For example, I no longer play Stardew Valley since my daughter doesn't play it anymore. It’s a fun game, but if I'm choosing what to spend my time on, I prefer activities that serve multiple goals goals simultaneously. The garden of my interests is not formal and orderly, but rather natural and tangled.

My daughter also has many interests. One year she was interested in Rubik's Cubes and other puzzles; this year she's learning everything about Pokémon. The transience of her interests doesn't bother me. It all combines in unexpected ways. It will be interesting to see how she grows, and to see how I'll grow too.

Thanks to Zachary Kai for hosting the IndieWeb Carnival this month!

En françaisCe mois-ci, le thème du Carnaval IndieWeb est « Intérêts convergents. » C'est peut-être plus facile de lister lesquels de mes centres d'intérêt ne sont pas convergents. Mes centres d'intérêt se recoupent souvent. Je vais commencer par une description de mes premiers intérêts et des façons dont ils sont liés.

La programmation est généralement utile. Je suis particulièrement intéressée par l'automatisation et les aides cognitives et physiques comme l'interface vocale. J'adore Emacs, qui est un éditeur de texte, mais je le bricole à tel point que je l'utilise pour presque tout : gérer mes notes et mes tâches, bien sûr, mais même enregistrer et éditer des fichiers audio et organiser mes dessins.

L'écriture m'aide à penser, à me remémorer et à partager. Org Mode sous Emacs me permet d'utiliser la technique de « programmation lettrée », qui est la combinaison de l'explication et de la programmation. Quelques idées sont plus faciles à penser et à exprimer par le dessin, lequel me permet de les explorer non linéairement. Mes dessins s'appliquent aussi à tous mes centres d'intérêt, comme la parentalité, la technologie, l'apprentissage et la planification. Le sketchnoting est une bonne manière d'apprendre beaucoup de choses, de partager mes notes et de me souvenir de certains moments. Par exemple, ma fille a hâte de finir une synthèse visuelle que nous avons élaborée ensemble, et qui est possible parce que j'avais écrit beaucoup de notes dans le journal web que j'avais développé et dans mon journal en français.

L'apprentissage du français depuis 4 mois touche aussi divers aspects de ma vie quotidienne. J'aide ma fille à l'école, j'essaie d'utiliser l'IA, je bricole mes outils, je regarde des émissions, je cherche des mots pour mes centres d'intérêt. Par exemple, j'ai mis à jour la police de caractères de mon écriture pour inclure les lettres accentuées. Cela a associé le dessin, la programmation, et naturellement l'apprentissage du français. J'ai aussi modifié mon environnement d'écriture sous Emacs pour rechercher les mots dans le dictionnaire et pour afficher les commentaires de l'IA. J'aime particulièrement explorer des techniques d'apprentissage avec ma fille comme les cartes mémoire et les histoires qui suivent le principe de l'apport compréhensible. Quelles méthodes sont efficaces contre quels défis, et comment nous pouvons tirer le meilleur parti des technologies disponibles ? Ce que nous apprenons nous servira bien dans tous les sujets.

De la même manière, l'apprentissage du piano m'aide à apprécier le défi et le plaisir de progresser. Une autre raison de le faire est qu'il aide ma fille à l'apprendre aussi.

Comme ma vie est remplie d'intérêts qui s'entrelacent, c'est important de gérer mon attention face à plusieurs tentations de s'éparpiller, comme la programmation de nouvelles automatisations. Je commence peut-être une tâche et je me retrouve ensuite à faire une tâche complètement différente après une suite d'étapes logiques. On sait ce que c'est, de fil en aiguille. Donc je dois écrire mes notes au fur et à mesure. Rien ne me presse et peu de mes tâches sont urgentes, donc quand je perds le fil de mes pensées, je peux rire et le retrouver. Si j'écris et que je partage ces notes, quelqu'un peut les trouver même après plusieurs années et me les rappeler. C'est très difficile de choisir un moment où j'arrête d'explorer et où je publie mes notes. La tentation est toujours de continuer à suivre une nouvelle idée.

Heureusement, l'effet cumulatif de loisirs qui se complètent m'encourage à grandir, et quand je suis bloquée dans une direction, une ou deux autres pistes se sont ouvertes. En parlant de directions, je trouve que c'est difficile d'écrire quand je veux introduire deux ou plusieurs suites d'idées simultanées, à cause de la linéarité de l'écriture. De toute façon, c'est mieux d'écrire même si c'est un peu décousu.

Je pense que la reconnaissance vocale m'aide à saisir plus d'idées et les progrès technologiques m'aident à les exécuter. Je vais aussi m'améliorer en apprenant et en reliant de nouvelles curiosités à mes autres curiosités. J'ai hâte de voir quelles sortes de choses sont possibles.

Bien que j'aie plusieurs heures de liberté maintenant que ma fille est capable de faire beaucoup de choses elle-même, il y a toujours plus de choses que je veux apprendre. Les loisirs entrelacés se développent, tandis que les loisirs isolés sont oubliés. Par exemple, je ne joue plus à Stardew Valley maintenant que ma fille n'y joue plus. C'est un jeu amusant, mais si je peux choisir un passe-temps, j'en préfère un qui serve des objectifs multiples simultanés. Le jardin de mes intérêts n'est pas formel et ordonné, mais plutôt naturel et entremêlé.

Ma fille a aussi beaucoup de centres d'intérêt. Une année elle s'est intéressée au Cube de Rubik et aux autres casse-têtes, une autre année elle apprenait tout sur Pokémon. Ça ne me dérange pas, tout se combine de façons inattendues. Ce sera intéressant de voir comment elle grandira, et moi aussi.

Merci à Zachary Kai d'accueillir le Carnaval IndieWeb ce mois-ci !

You can e-mail me at sacha@sachachua.com.

-

🔗 r/Harrogate Crime and safety in this area of Harrogate rss

| I’m looking at buying a house in this area of Harrogate. It is not a cheap place at all but it’s a nice house and the road itself seems nice However I am now concerned about the safety and levels of crime in the area. Not on the road I am looking at in particular but on the approach and adjacent roads that we’d have to walk through to get to the town. Please can people let me know your experiences of this area - good and bad? I’d be most interested in hearing from residents. I have previously lived in London (Golders Green, although the Hampstead Garden Suburb), Newcastle City Centre as a student and for work, and I’m used to Middlesbrough - somewhere close to where I grew up and so spent a lot of time there. I’m looking at this as a place where I can start a family potentially and spend a long period of time. I like being close to amenities but at the end of the day safety and feeling comfortable in your area has to be the number one priority. submitted by /u/DoughnutHairy9943

| I’m looking at buying a house in this area of Harrogate. It is not a cheap place at all but it’s a nice house and the road itself seems nice However I am now concerned about the safety and levels of crime in the area. Not on the road I am looking at in particular but on the approach and adjacent roads that we’d have to walk through to get to the town. Please can people let me know your experiences of this area - good and bad? I’d be most interested in hearing from residents. I have previously lived in London (Golders Green, although the Hampstead Garden Suburb), Newcastle City Centre as a student and for work, and I’m used to Middlesbrough - somewhere close to where I grew up and so spent a lot of time there. I’m looking at this as a place where I can start a family potentially and spend a long period of time. I like being close to amenities but at the end of the day safety and feeling comfortable in your area has to be the number one priority. submitted by /u/DoughnutHairy9943

[link] [comments]

---|--- -

🔗 sacha chua :: living an awesome life Sorting completion candidates, such as sorting Org headings by level rss

: Made the code even neater with

:key, included the old code as wellAt this week's Emacs Berlin meetup, someone wanted to know how to change the order of completion candidates. Specifically, they wanted to list the top level Org Mode headings before the second level headings and so on. They were using org-ql to navigate Org headings, but since org-ql sorts its candidates by the number of matches according to the code in the

org-ql-completing-readfunction, I wasn't quite sure how to get it to do what they wanted. (And I realized my org-ql setup was broken, so I couldn't fiddle with it live. Edit: Turns out I needed to update the peg package) Instead, I showed folksconsult-org-headingwhich is part of the Consult package, which I like to use to jump around the headings in a single Org file. It's a short function that's easy to use as a starting point for something custom.Here's some code that allows you to use

consult-org-headingto jump to an Org heading in the current file with completions sorted by level.(with-eval-after-load 'consult-org (advice-add #'consult-org--headings :filter-return (lambda (candidates) (sort candidates :key (lambda (o) (car (get-text-property 0 'consult-org--heading o)))))))

Figure 1: Screenshot showing where the candidates transition from top-level headings to second-level headings My previous approach defined a different function based on

consult-org-heading, but using the advice feels a little cleaner because it will also make it work for any other function that usesconsult-org--headings. I've included the old code in case you're curious. Here, we don't modify the function's behaviour using advice, we just make a new function (my-consult-org-heading) that calls another function that processes the results a little (my-consult-org--headings).Old code, if you're curious(defun my-consult-org--headings (prefix match scope &rest skip) (let ((candidates (consult-org--headings prefix match scope))) (sort candidates :lessp (lambda (a b) (let ((level-a (car (get-text-property 0 'consult-org--heading a))) (level-b (car (get-text-property 0 'consult-org--heading b)))) (cond ((< level-a level-b) t) ((< level-b level-a) nil) ((string< a b) t) ((string< b a) nil))))))) (defun my-consult-org-heading (&optional match scope) "Jump to an Org heading. MATCH and SCOPE are as in `org-map-entries' and determine which entries are offered. By default, all entries of the current buffer are offered." (interactive (unless (derived-mode-p #'org-mode) (user-error "Must be called from an Org buffer"))) (let ((prefix (not (memq scope '(nil tree region region-start-level file))))) (consult--read (consult--slow-operation "Collecting headings..." (or (my-consult-org--headings prefix match scope) (user-error "No headings"))) :prompt "Go to heading: " :category 'org-heading :sort nil :require-match t :history '(:input consult-org--history) :narrow (consult-org--narrow) :state (consult--jump-state) :annotate #'consult-org--annotate :group (and prefix #'consult-org--group) :lookup (apply-partially #'consult--lookup-prop 'org-marker))))I also wanted to get this to work for

C-u org-refile, which usesorg-refile-get-location. This is a little trickier because the table of completion candidates is a list of cons cells that don't store the level, and it doesn't pass the metadata tocompleting-readto tell it not to re-sort the results. We'll just fake it by counting the number of "/", which is the path separator used iforg-outline-path-complete-in-stepsis set tonil.(with-eval-after-load 'org (advice-add 'org-refile-get-location :around (lambda (fn &rest args) (let ((completion-extra-properties '(:display-sort-function (lambda (candidates) (sort candidates :key (lambda (s) (length (split-string s "/")))))))) (apply fn args)))))

Figure 2: Screenshot of sorted refile entries In general, if you would like completion candidates to be in a certain order, you can specify

display-sort-functioneither by callingcompleting-readwith a collection that's a lambda function instead of a table of completion candidates, or by overriding it withcompletion-category-overridesif there's a category you can use orcompletion-extra-propertiesif not.Here's a short example of passing a lambda to a completion function (thanks to Manuel Uberti):

(defun mu-date-at-point (date) "Insert current DATE at point via `completing-read'." (interactive (let* ((formats '("%Y%m%d" "%F" "%Y%m%d%H%M" "%Y-%m-%dT%T")) (vals (mapcar #'format-time-string formats)) (opts (lambda (string pred action) (if (eq action 'metadata) '(metadata (display-sort-function . identity)) (complete-with-action action vals string pred))))) (list (completing-read "Insert date: " opts nil t)))) (insert date))If you use

consult--readfrom the Consult completion framework, there is a:sortproperty that you can set to either nil or your own function.This entry is part of the Emacs Carnival for Feb 2026: Completion.

This is part of my Emacs configuration.You can comment on Mastodon or e-mail me at sacha@sachachua.com.

-

🔗 r/Leeds Update on my ridiculous connection rss

I’m the idiot who booked this connection between the coach and train station. I With a 3 minute delay from Preston, I am delighted to say I made it on to the coach which I am currently writing this from. Thank who to ever who game advice on the best way to execute this.

submitted by /u/Glittering_Yam_5613

[link] [comments] -

🔗 r/york Latest engineering improvement works for TRU between Leeds & York (via Crossgates) plus effecting trains between Leeds to Selby/Hull rss

| submitted by /u/CaptainYorkie1

| submitted by /u/CaptainYorkie1

[link] [comments]

---|--- -

🔗 r/Yorkshire Latest engineering improvement works for TRU between Leeds & York (via Crossgates) plus effecting trains between Leeds to Selby/Hull rss

| submitted by /u/CaptainYorkie1

| submitted by /u/CaptainYorkie1

[link] [comments]

---|--- -

🔗 r/Leeds Latest engineering improvement works for TRU between Leeds & York (via Crossgates) plus effecting trains between Leeds to Selby/Hull rss

submitted by /u/CaptainYorkie1

[link] [comments] -

🔗 r/LocalLLaMA American closed models vs Chinese open models is becoming a problem. rss

The work I do involves customers that are sensitive to nation state politics. We cannot and do not use cloud API services for AI because the data must not leak. Ever. As a result we use open models in closed environments.

The problem is that my customers don’t want Chinese models. “National security risk”.

But the only recent semi-capable model we have from the US is gpt-oss-120b, which is far behind modern LLMs like GLM, MiniMax, etc.

So we are in a bind: use an older, less capable model and slowly fall further and further behind the curve, or… what?

I suspect this is why Hegseth is pressuring Anthropic: the DoD needs offline AI for awful purposes and wants Anthropic to give it to them.

But what do we do? Tell the customers we’re switching to Chinese models because the American models are locked away behind paywalls, logging, and training data repositories? Lobby for OpenAI to do us another favor and release another open weights model? We certainly cannot just secretly use Chinese models, but the American ones are soon going to be irrelevant. We’re in a bind.

~~Our one glimmer of hope is StepFun-AI out of South Korea. Maybe they’ll save Americans from themselves.~~ I stand corrected: they’re in Shanghai.

Cohere are in Canada and may be a solid option. Or maybe someone can just torrent Opus once the Pentagon force Anthropic to hand it over…

submitted by /u/JockY

[link] [comments] -

🔗 r/wiesbaden Dobermann mit Biss-vergangenheit sucht dringend ein Erfahrenes Zuhause rss

submitted by /u/_thatkitten

[link] [comments] -

🔗 r/reverseengineering Reverse Engineering Garmin Watch Applications with Ghidra rss

submitted by /u/anvilventures

[link] [comments] -

🔗 r/LocalLLaMA Qwen3.5-35B-A3B Q4 Quantization Comparison rss

| This is a Q4 quantization sweep across all major community quants of Qwen3.5-35B-A3B, comparing faithfulness to the BF16 baseline across different quantizers and recipes. The goal is to give people a data-driven basis for picking a file rather than just grabbing whatever is available. For the uninitiated: KLD (KL Divergence): "Faithfulness." It shows how much the quantized model's probability distribution drifts from a baseline (the probability distribution of the original weights). Lower = closer. PPL (Perplexity): Used to measure the average uncertainty of the model when predicting the next token. It is derived from the total information loss (Cross Entropy). Lower = more confident. They are correlated. Perplexity measures the total error, KLD measures the relative error (like a routing drift of an MoE model). This relationship helps in determining information loss (or gain when training). Since we are trying to see how much information we've lost and since PPL is noisy as it can get a better score by pure luck, KLD is better as it is not relying on the dataset but on the baseline. If you need the most faithfull quant, pick the one with the lowest KLD.