to read (pdf)

- Letting AI Actively Manage Its Own Context | 明天的乌云

- Garden Offices for Sale UK - Portable Space

- Cord: Coordinating Trees of AI Agents | June Kim

- Style tips for less experienced developers coding with AI · honnibal.dev

- Haskell for all: Beyond agentic coding

- March 06, 2026

-

🔗 r/Yorkshire Join me on a hike through a hidden pocket of beauty in West Yorkshire. From Ferrybridge to Brotherton, Fairburn, Ledsham and Ledston. Let me know your thoughts 🙂 rss

-

🔗 r/Leeds Has anyone else had the pleasure of sharing a bus with the singing man?? rss

submitted by /u/throwawayjinkie

[link] [comments] -

🔗 r/Yorkshire Barn venues rss

Hi all, looking for venues, preferably barn or with a barn look that you can hire for the day ? Doesn’t need to be anything fancy and it doesn’t need to include too much, just need somewhere to host a celebration. Thanks !

submitted by /u/AngusWtf

[link] [comments] -

🔗 r/reverseengineering Reverse-engineered the WiFi transfer protocol for HeyCyan smart glasses (BLE + USR-W630 WiFi module) — first iOS implementation rss

submitted by /u/CockroachLow3274

[link] [comments] -

🔗 r/wiesbaden Getting black-and-white photos developed rss

Does anyone know where I can get a black-and-white film (Kentmere Pan 400) from an analogue film camera developed? I already know about Rossmann and the like. I'm looking for somewhere that isn't too expensive but still turns out good photos. Someone once recommended Foto Express in Frankfurt am Main to me. I'm looking for something comparable here. Thanks

submitted by /u/DocterSkinny

[link] [comments] -

🔗 @binaryninja@infosec.exchange If you are at RE//verse, you can find the Binary Ninja Booth in the RE//fresh mastodon

If you are at RE//verse, you can find the Binary Ninja Booth in the RE//fresh lounge! We will be running live demos and handing out Binja swag. Come say hey and sign our banner! Not in Orlando this week? We will be streaming at 3 PM ET live from RE//verse: https://youtube.com/live/bW-oz1UVkCM?feature=share

-

🔗 facebookresearch/faiss v1.14.1 release

[1.14.0] - 2026-03-06

Added

- 6cf2c63 Update SVS to v0.2.0 and install from conda forge (#4860)

- 1cb3d46 feat(svs): LeanVec OOD support (#4773)

- 5cf2c42 Expose IndexBinaryFlat to the C API. (#4834)

- db9ba35 add hadamard transformation as an index for IVF (#4856)

Changed

- c8579de Try to force relative import statement (#4878)

- 471ddad Increment to next release, v1.14.1 (#4861)

- c90c9dc Update python to include 3.13 and 3.14 (#4859)

- 8af77fe SIMD-optimize multi-bit RaBitQ inner product (#4850)

- ccc934f ScalarQuantizer: split SIMD specializations into per-SIMD TUs + DD dispatch (#4839)

Fixed

- 5622e93 (HEAD -> main, origin/main, origin/HEAD) v1.14.1 Fix build-release (#4876)

- 8431e04 Replace squared-distance IP with direct dot-product in multi-bit RaBitQ (#4877)

- 28f79bd Fix SWIG 4.4 multi-phase init: replace import_array() with import_array1(-1) (#4846)

Deprecated

-

🔗 r/Yorkshire Transit Options in Yorkshire Dales Park? rss

Hi friends, I'll be in Hawes this summer and depend mostly on Google maps to get me around via public transit. I'd like to go eastward for a few days (e.g., Ripon, Whitby), but Google shows only rail options that connect through York (e.g., Hawes -> York -> Whitby). Curious if there are additional transit options that offer more direct routes westward or eastward. Appreciate it!

submitted by /u/minpaul

[link] [comments] -

🔗 News Minimalist 🐢 Weight loss drugs fight multiple addictions + 12 more stories rss

In the last 4 days ChatGPT read 122438 top news stories. After removing previously covered events, there are 13 articles with a significance score over 5.5.

[6.5] GLP-1 drugs may fight addiction across every major substance, according to a study of 600,000 people —theconversation.com(+30)

A study of 600,000 people found that GLP-1 drugs significantly reduce cravings, overdoses, and deaths across multiple addictions, including opioids and alcohol, marking a potential breakthrough in addiction medicine.

Researchers observed a 50% reduction in substance-related deaths among users already struggling with addiction. The drugs also lowered the risk of developing new dependencies on nicotine and cocaine by roughly 20%, likely by dampening dopamine signaling in the brain’s reward centers.

While not yet approved specifically for addiction, GLP-1 medications are already widely prescribed for diabetes and obesity. Ongoing clinical trials aim to confirm these findings and address questions regarding long-term effectiveness.

[5.8] Iran grants China exclusive passage through the Strait of Hormuz —ndtv.com(+110)

Iran will now permit only Chinese vessels to navigate the Strait of Hormuz, rewarding Beijing's support during the regional conflict and further threatening critical global energy supply chains.

The Islamic Revolutionary Guard Corps claims full control of the chokepoint, warning that non-Chinese ships face missile or drone strikes. This blockade impacts regional neighbors like Qatar and the UAE while disrupting twenty percent of the world’s total oil supply transit.

Beijing previously condemned Western military actions against Iran as unacceptable. Meanwhile, the United States government maintains that military escorts may be deployed to prevent domestic inflation and protect the international flow of commerce.

Highly covered news with significance over 5.5

[6.6] Evo 2: An AI model for genome prediction and design across all life — nature.com (+6)

[6.1] France expands nuclear arsenal and strengthens European defense cooperation — bostonglobe.com (+29)

[5.9] AI blood test detects silent liver disease years before symptoms — sciencedaily.com (+3)

[5.8] Indonesia bans social media for children under 16 — abcnews.com (+45)

[5.7] US forces support Ecuador's fight against drug trafficking organizations — bostonglobe.com (+29)

[5.7] China sets slowest growth target since 1991, focusing on tech and domestic demand — abcnews.com (+49)

[5.5] New study reveals underestimated sea level rise threatens millions more people — abcnews.com (+14)

[5.5] Lawsuit claims Google Gemini AI gave dangerous instructions leading to a man's suicide — time.com (+34)

[5.5] New treatment is reducing seizure frequency in children by 91% — ndtv.com (+11)

[5.8] Japan approves world's first stem cell treatment for Parkinson's and heart failure — nippon.com (+6)

[5.8] BYD introduces new battery technology with over 600 miles of range and rapid charging — fastcompany.com (+3)

Thanks for reading!

— Vadim

You can set up and personalize your own newsletter like this with premium.

-

🔗 r/york York shot on my cheap little point and shoot film camera:) rss

Some photos I shot a little while back in your beautiful city!

submitted by /u/Organic_Repair8717

[link] [comments] -

🔗 r/york Live music? rss

Looking for a few recommendations for live bands/music in the city centre. My girlfriend and I are here for the weekend. Any recommendations are greatly appreciated, thanks 👍

submitted by /u/stayant1

[link] [comments] -

🔗 r/Harrogate Best way to travel to London rss

submitted by /u/Apprehensive_Ring666

[link] [comments] -

🔗 r/Leeds Ex Starbucks, Chapel Allerton, What Next rss

Hello

I see the Ex Starbucks, Chapel Allerton, is under offer. Anybody know who's moving in? Big building to fill.

submitted by /u/renlauo

[link] [comments] -

🔗 r/reverseengineering VirusTotal but free rss

submitted by /u/el_mulon

[link] [comments] -

🔗 r/york Loft conversion recommendations rss

Hiya lovely people of York - happy Friday!

Looking to get our mid terrace house loft converted - we got very stung by a plumber we found through checkatrade and have had problems finding roofers in the past, so the main thing stopping me is worry about getting the wrong people in!

Anyone got recommendations? (Also rough cost if you don't mind sharing) - we're looking to go as simple as possible, no Dormer or bathroom !

submitted by /u/AutumnDream1ng

[link] [comments] -

🔗 r/reverseengineering My journey through Reverse Engineering SynthID rss

submitted by /u/Available-Deer1723

[link] [comments] -

🔗 r/reverseengineering My journey through Reverse Engineering SynthID rss

submitted by /u/MissAppleby

[link] [comments] -

🔗 r/Yorkshire Fountains Abbey, Ripon, Yorkshire rss

submitted by /u/mdbeckwith

[link] [comments] -

🔗 jank blog jank is off to a great start in 2026 rss

Hey folks! We're two months into the year and I'd like to cover all of the progress that's been made on jank so far. Before I do that, I want to say thank you to all of my GitHub sponsors, as well as Clojurists Together for sponsoring this whole year of jank's development!

-

- March 05, 2026

-

🔗 IDA Plugin Updates IDA Plugin Updates on 2026-03-05 rss

IDA Plugin Updates on 2026-03-05

New Releases:

Activity:

- capa

- ghidra

- a7a795b3: Merge remote-tracking branch 'origin/Ghidra_12.1'

- 4e4674be: Merge branch 'GP-6537_ryanmkurtz_PR-1905_mduggan_phar_lap_ne_support'

- 0351dc99: GP-6537: Certify

- 6fa0ddbc: Support large (>2^16) offset to exe file NE header

- f466bb00: Merge remote-tracking branch 'origin/Ghidra_12.1'

- d374989a: Merge remote-tracking branch 'origin/GP-6536_ghidragon_null_ptr_excep…

- 5e46aa4e: Merge remote-tracking branch 'origin/GP-0-dragonmachre-enum-test-fix'…

- 9d55f0d8: Test fix

- ida-edit-function-prototype

- ida-pro-mcp

- b160449c: Merge pull request #252 from baoan7090/baoan7090-patch-1

- 4d613d0a: Merge pull request #262 from haosenwang1018/fix/bare-except

- b0844afa: Merge pull request #257 from withzombies/many-to-many-session-management

- 5aae5542: fix: prevent SIGPIPE crash and port collision with multiple IDA insta…

- 5fb925bd: fix: auto-increment port for multiple IDA instances

- 20f28764: fix: ignore SIGPIPE to prevent IDA crash on client disconnect

- idamagicstrings

- idasql

- msc-thesis-LLMs-to-rank-decompilers

- Rikugan

- 13c59b49: security: add prompt injection mitigation and harden approval gates

- 80bebbb4: update readme

- 4b244a89: update readme

- b80a9f73: docs(webpage): sync docs with current codebase and add llms.txt

- adfc54df: refactor: rewrite MCP client using official mcp SDK

- 4c9f2eb8: fix readme

- 2573664e: adds gif

- 3f7d8725: feat: expanded test suite and misc fixes

- 9ce5f0a0: Merge branch 'desloppify/code-health'

- 629f7298: refactor: code health improvements (desloppify 37→81)

- f3dbff97: Merge branch 'main' of github.com:buzzer-re/Rikugan

- 474bbff9: adds cff example gif

- 25072ded: feat: IL analysis/transform tools, deobfuscation skill, and fixes

- 39f51312: docs: fix platform paths, add llms.txt, Architecture button, il_problem

- sighthouse

- 6fa33f86: First release \o/

- zenyard-ida-public

-

🔗 r/york No Three data signal in Goodramgate rss

Is anyone else experiencing the Three mobile data drops out in Goodramgate/Kings Sq? There’s a full house of signal but no data connection. Eg Streaming music stops playing as I walk through the area. It’s been happening for a few weeks now.

submitted by /u/dawnriser

[link] [comments] -

🔗 r/york recently moved to york and looking to make new mates (23m) rss

moved to foss islands 6 months ago but i’m struggling to meet new people here. i work for the NHS doing shifts so i struggle to attend anything regularly. i enjoy swimming, running and good old pints🍻

any local casual social groups/sports groups? i’ve tried meetup.com and others but struggling to find many groups - any recommendations welcome :)

submitted by /u/Internal-Bet4689

[link] [comments] -

🔗 r/wiesbaden Best ice cream or cake for Saturday rss

Hi :) I'll be in Wiesbaden on Saturday and the weather is supposed to be pretty good. What would be the best ice cream shop in Wiesbaden? Alternatively, a café with a good selection of cakes, ideally a more traditional one :) Thanks!!

submitted by /u/LastCauliflower3842

[link] [comments] -

🔗 r/Leeds Anywhere that serves pints in ice cold glasses? rss

One day of sun and I’m craving it

submitted by /u/augustbecchio

[link] [comments] -

🔗 r/wiesbaden US Car people in Wiesbaden rss

Hi guys,

since the weather is great and the (early) season is on, I am looking for likeminded people with (old) US Cars - Muscle Cars, Trucks, Jeeps etc. There are some meetings in the area but after visiting some, I feel like a lot of those people are organized in clubs etc and drive like 200km just to show off their new paintjob ;) I am more into tech talks, wrenching, learning and having fun with the cars. And I am not 60 years old.

I own a '93 Corvette myself, have some knowledge of 350 Chevy V8s and cars in general, and would love to meet new interesting people who share this hobby. I'm german but my english is fine. There are a lot of US spec cars in Wiesbaden so I thought I just write in english here. I am living next to Hainerberg and was greeted by a black Challenger today, so this was my sign to give this post a go. Just hit me up and connect.

Cheers!

submitted by /u/randomsubi

[link] [comments] -

🔗 r/york Rowntree Park Tennis Partner rss

Hello. I am looking for a tennis buddy to hit balls with on an evening / sometimes during week with the lighter nights coming. Just joined Rowntree Park tennis yesterday so id like to play here. I'm intermediate/ advanced but I don't really like to play competitively tbh.

submitted by /u/BlueSky86010

[link] [comments] -

🔗 The Pragmatic Engineer The Pulse: Cloudflare rewrites Next.js as AI rewrites commercial open source rss

Hi, this is Gergely with a bonus, free issue of the Pragmatic Engineer. This issue is the entire The Pulse issue from the past week, which paying subscribers received seven days ago. This piece generated quite a few comments across subscribers, and so I'm sharing it more broadly, especially as it raises questions on what is defensible and what is not with open source.

If you've been forwarded this email, you can subscribe here to get issues like this in your inbox.

Today's issue of The Pulse focuses on a single event because it's a significant one with major potential ripple effects. On Tuesday, Cloudflare shocked the dev world by announcing that they have rewritten Next.js in just one week, with a single developer who used only $1,100 in tokens:

Cloudflare CTO Dane Knecht on X

There are several layers to dig into here:

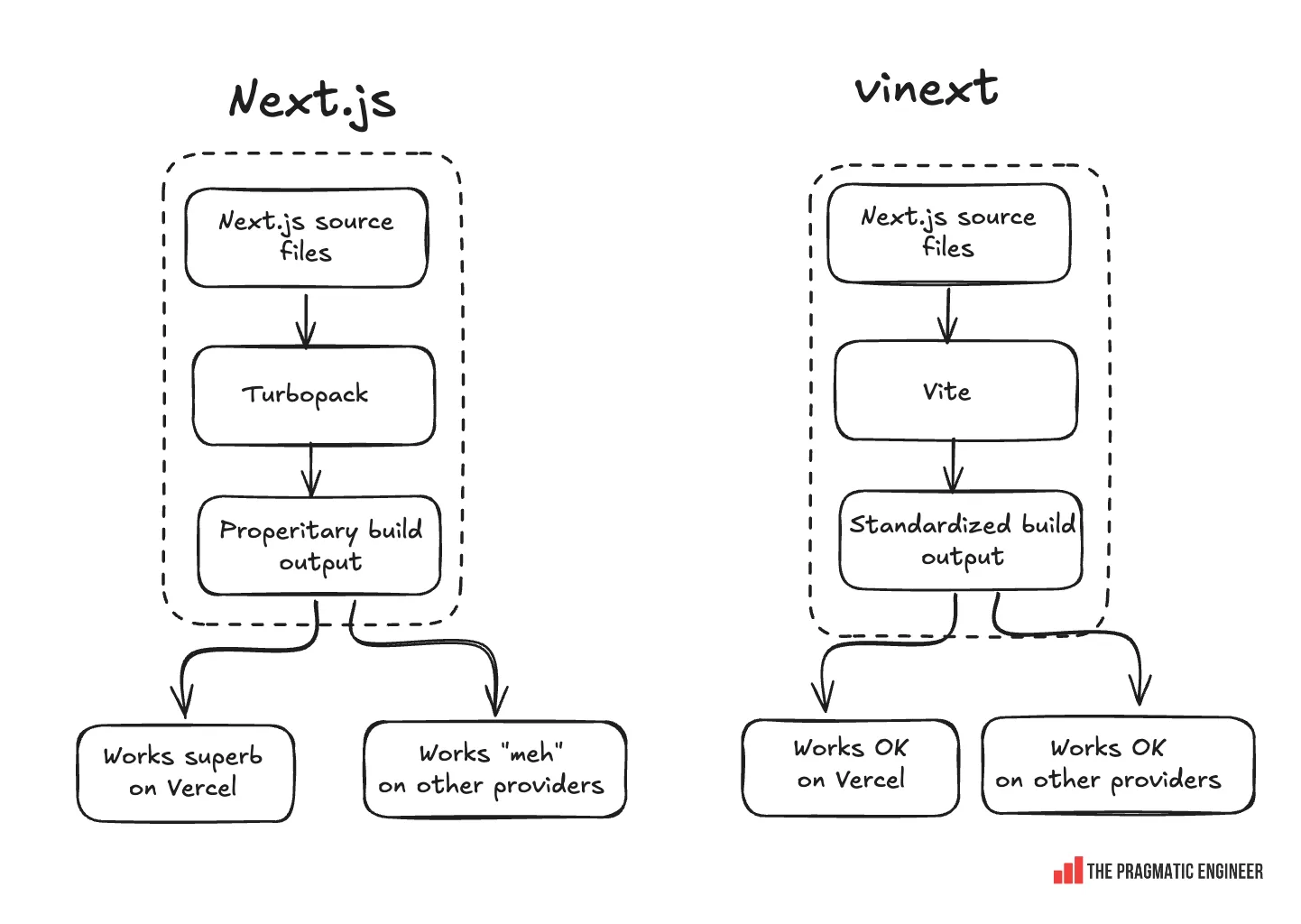

- The Next.js ecosystem: a recap. Close to half of React devs use Next.js, and the best place to deploy Next.js is on Vercel - partly thanks to its proprietary build output.

- What Cloudflare did with Next.js. Replacing the build engine in Next.js with the more standard Vite one, allowing Next.js apps to be easily deployed on Cloudflare.

- AI brings the impossible within reach. What would take years in engineering terms was executed in one week with some tokens.

- "AI slop" still an issue. Contrary to Cloudflare's claims, vinext is not production-ready, and will need plenty of cleanup and auditing to make it on par with Next.js.

1. The Next.js ecosystem: a recap

First, some background. Next.js is the most popular fullstack React framework and around half of all React devs use it, as per recent research such as the 2025 Stack Overflow developer survey. Next.js is an open source project, built and mostly maintained by Vercel, which is the preferred deployment target for Next.js applications for many reasons. One of them is that Next.js applications are built with Vercel's Turbopack build tool, and the output of a build is a proprietary format. As Netlify engineer Eduardo Bouças writes:

"The output of a Next.js build has a proprietary and undocumented format that is used in Vercel deployments to provision the infrastructure needed to power the application.

This means that any hosting providers other than Vercel must build on top of undocumented APIs that can introduce unannounced breaking changes in minor or patch releases. (And they have)".

Next.js is an interestingly built project, where everything is open source, and the best place to deploy a Next.js application is on Vercel, as it's optimized to run undocumented build artifacts the most efficiently. This is a smart strategy from Vercel which competitors will dislike, as any hosting provider would prefer Next.js to produce a standard build format. To do this, the build engine, Turbopack, would need to be replaced with something more standard.

Let's talk about build tools for web development. According to the State of JS 2025 survey, the most popular in the web ecosystem are:

- Vite: the most popular choice for new projects due to its speed and developer experience. Uses projects like esbuild and Rollup under the hood

- Webpack: a legacy tool that's not very performant, but still widely deployed in older projects

- Turbopack: Created by Vercel and optimized for larger Next.js applications. Built in Rust and intended to be more performant

- Bun: a relatively new, all-in-one runtime and bundler. Anthropic acquired the team in December, and some Bun folks are now focused on improving Claude Code's performance.

So, most of the web ecosystem uses Vite as a build tool; Next.js uses Turbopack, and the majority of React applications with a full-stack React framework use Next.js. Basically, most devs not using Next.js are likely to use Vite as their build tool.

2. What Cloudflare did with Next.js

Here's a naive idea: what if Next.js used Vite to generate build outputs? In that case, build outputs would be standardized and would run equally well on any cloud provider, as there would be nothing proprietary or undocumented to Vercel.

And this is what Cloudflare did: replace Turbopack with Vite and call the new package 'vinext':

Cloudflare replaced the Turbopack build dependency with Vite to create vinext

Buried midway in the announcement is how this project is experimental and not at all guaranteed to work okay: it's a 'use-at-own-risk' project. Still, the mere fact of this development feels like an earthquake in the tech world because of how it was pulled off.

3. AI brings the impossible within reach

In a blog post announcing the project, Cloudflare claims only one engineer "rebuilt" the whole thing in a way that's trivial to deploy to Cloudflare's own infrastructure, and only cost $1,100 in tokens. From Cloudflare's statement:

"Last week, one engineer and an AI model rebuilt the most popular front-end framework from scratch. The result, vinext (pronounced "vee-next"), is a drop-in replacement for Next.js, built on Vite, that deploys to Cloudflare Workers with a single command. In early benchmarks, it builds production apps up to 4x faster and produces client bundles up to 57% smaller. And we already have customers running it in production.

The whole thing cost about $1,100 in tokens".

What Cloudflare did:

- Took the Next.js public API

- Reimplemented behaviour using Vite

- Created build output whose behaviour matches the "original" Next.js implementation

After 10 years, the core of Next has around 194,000 lines of code (LOC)**. Meanwhile, vinext is about 67,000 lines of code which suggests a much leaner implementation: for example, vinext does not need to support legacy Next APIs, and vinext currently supports 94% of the Next.js API (and it's safe to assume they left complex edge cases in the remaining 6%).

** the Next.js repository is closer to 2M lines of code: 1M is bundled dependencies (eg React bundles, CSS build etc), tests are 308,000 LOC, Turbopack 311,000 LOC.
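As a quick sanity check on the numbers above, here is a minimal sketch that only redoes the arithmetic on the quoted line counts. The variable names are mine, and the figures are the rounded ones from the text, so treat the output as approximate:

```python
# Line counts as quoted in the text (rounded by the author).
NEXT_CORE_LOC = 194_000
VINEXT_LOC = 67_000

# Footnote breakdown of the rest of the Next.js repository.
BUNDLED_DEPS_LOC = 1_000_000
TESTS_LOC = 308_000
TURBOPACK_LOC = 311_000

# vinext's size relative to the Next.js core.
size_ratio = VINEXT_LOC / NEXT_CORE_LOC

# Sum of the components the footnote lists, plus the core itself.
repo_total = NEXT_CORE_LOC + BUNDLED_DEPS_LOC + TESTS_LOC + TURBOPACK_LOC

print(f"vinext is about {size_ratio:.0%} the size of the Next.js core")
print(f"listed components add up to {repo_total:,} LOC")
```

On these figures, vinext is roughly a third the size of the Next.js core, and the listed components sum to about 1.8M lines, which is consistent with "closer to 2M".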

Pre-AI, this reimplementation would have taken years of engineering time to complete. Doing what Cloudflare did was always possible _in theory_, but never seemed practical. I mean, why have a team of engineers spend potentially years on generating a standardized build output for Next.js apps? Even if they did, the dev community would have doubts about whether Cloudflare would maintain the project.

This is the thing with forking or rewriting open source projects: a major value proposition for commercial open source is to know that they will be maintained. Vercel has proved it's a reliable custodian of Next.js for the past 10 years. Without AI, it could be assumed that any new reimplementation would eventually run out of steam.

Separately but relatedly, Cloudflare has now proved that the cost of rewriting existing software has become ~100x cheaper, thanks to AI, and this economy is likely to be the case for maintenance, too. Considering how trivial it was to rebuild one of the more complex open source projects, this augurs well for it being trivial and much cheaper to maintain in the future. Potentially, Cloudflare no longer needs to budget an engineering team only for maintenance, if a single engineer could maintain the project, part-time!

Cloudflare had a project measured in engineering years, and completed it in one engineering week! It just took a single engineer using OpenCode (open source coding agent), Opus 4.5, and a bunch of tokens, then: 'boom', vinext was born.

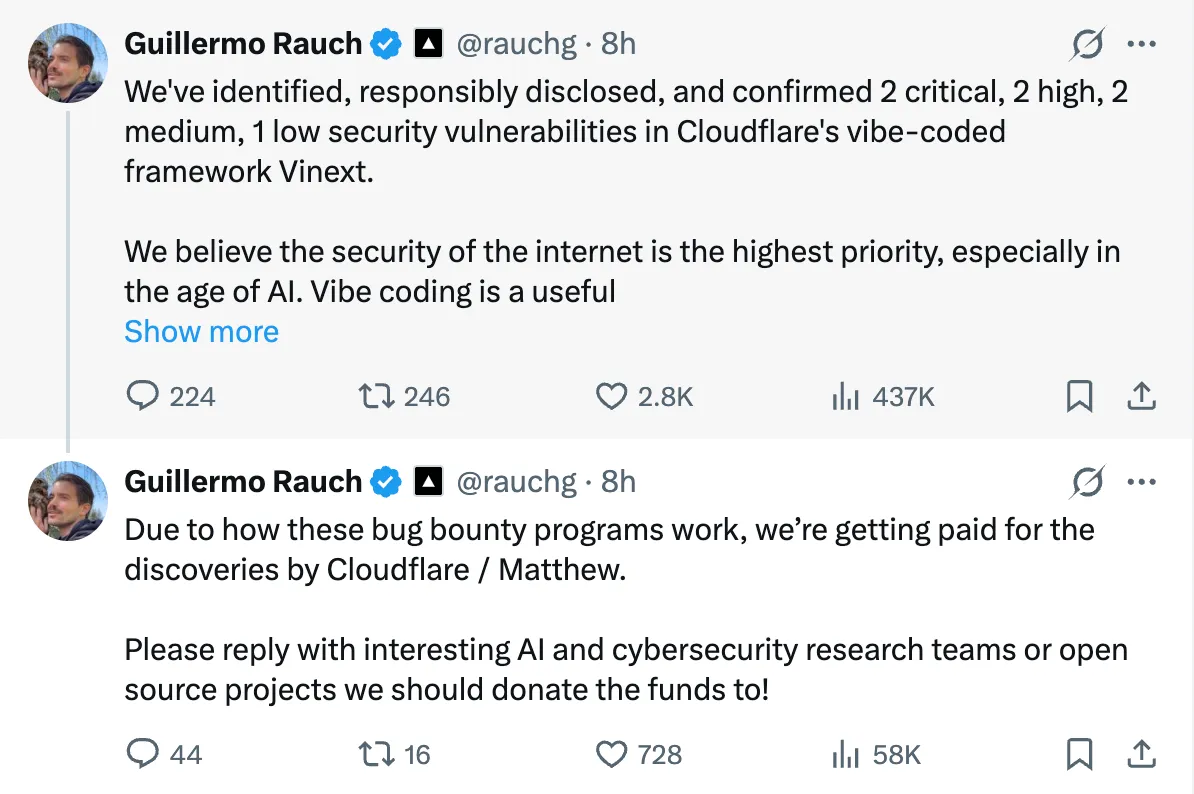

4. "AI slop" still an issue

There are questions about the quality of vinext, though. Vercel, naturally, is unhappy and hit out at the obvious weakness that vinext is unfit for production usage because it's insecure. Vercel CEO, Guillermo Rauch, did not miss a beat by tying Cloudflare's effort to the "vibe coding" stereotype of sloppy work executed with a lack of understanding:

Guillermo Rauch on X

Guillermo has a point: anyone who stopped reading Cloudflare's launch announcement after the first few sentences would assume it's production-ready, with the first paragraph of this announcement closing with:

"And we already have customers running it in production."

However, Cloudflare doesn't share the rather crucial detail that "running in production" means that vinext has been deployed onto a beta site, until more than 1,000 words (around 2-3 pages) into the announcement:

"We want to be clear: vinext is experimental. It's not even one week old, and it has not yet been battle-tested with any meaningful traffic at scale. (...)

We've been working with National Design Studio, a team that's aiming to modernize every government interface, on one of their beta sites, CIO.gov."

Oh. So, "customers running it in production" at Cloudflare apparently means "customer running a beta site in production without meaningful traffic." This is a first from the infrastructure giant, which usually prides itself on accurate statements!

This detail was also absent when Cloudflare's CEO and CTO were boosting vinext like it was a mature, battle-tested product. In that context, Vercel's raising of the issue of security vulnerabilities is more than fair game, in my view.

Still, all that doesn't alter the core learning from this project: that AI has the power to drastically reduce engineering time by up to ~100x and deliver usable-enough output, for relatively negligible financial cost. Just keep in mind that security and reliability issues will probably take plenty of extra time and effort to address.

5. New attack vector on commercial open source?

If arch-rivalries exist in tech, then Cloudflare and Vercel are a prime example. Both are gunning to become the most popular platform for developers to deploy their code, and the CEOs are regularly seen in public taking shots at the other side. One such spat happened in March, as covered at the time:

"Things kicked off on social media, with developers confused about the severity of the incident, and about why Next.js seemed silent, and also why Cloudflare sites were breaking due to its fix for the CVE causing its own issues. It was at that point that Cloudflare's CEO, Matthew Prince, entered the chat to accuse Vercel of not caring about security:

Given the security incident was ongoing, this felt a bit "below the belt" by the Cloudflare chief. Criticizing rivals is fair game, but why not wait until the incident is over? The punch landed, and Vercel's CEO Guillermo Rauch is not someone to take it lying down, so he hit back.

Cloudflare's CEO then responded with a cartoon implying that although Vercel is much larger than its competitor Netlify, Cloudflare is 100x bigger than both, and could stomp them into the ground at will."

Serving the public interest wasn't why Cloudflare rewrote Next.js: they did it because they want Next.js sites to be deployed onto Cloudflare, but doing so made little sense until now because Next.js produced bespoke build output optimized for Vercel's infrastructure. With this change, Cloudflare claims it provides _superior_ performance when hosting Next.js apps, according to their own measurements.

I'd just add that performance is important for developers, but other things matter, too. Cost, reliability, developer experience, and how much devs like a company, are all factors in choosing between vendors. Also, performance measurements from a vendor about its own service must be taken with a large pinch of salt.

Zooming out from this episode, it seems that AI is bringing the value of existing commercial open source moats into question. Vercel carved out a clever open source strategy that helped turn its open source investment into business revenue:

- Build and maintain Next.js, delivering the best developer experience (DX).

- Optimize Vercel to serve the specific (and undocumented) build output of Next.js.

- Most developers onboarding to Next.js will decide to deploy on Vercel to get the most benefit, in terms of DX and performance.

- … repeat for years while the business becomes worth billions! (Vercel was valued at $9B last October).

Underpinning this success are some assumptions:

1. Next.js will remain the #1 choice for developers to build React applications, thanks to ongoing investment.

2. It is expensive to rewrite Next.js to be deployable and performant on another cloud vendor.

3. Even if someone did #2, developers would be skeptical and not switch over.

Vercel can invest in #1 to keep Next as best-in-class, while knowing that the risk of #2 occurring is minor. However, Cloudflare has now "cloned" Next, and can easily keep up with all changes in the future, and port them back to vinext.

But AI makes it trivial to "piggyback" off any commercial open source project, which is a massive problem for commercial open source startups. Vercel puts all the effort and investment into building and maintaining Next.js, while Cloudflare enjoys the benefit of this hard work (the Next.js public API), which is now easily deployable to Cloudflare, and can undercut Vercel on price. For all future Next.js changes, Cloudflare will just sync them to vinext, using AI!

WordPress had a similar problem, with WP Engine "piggybacking" off its work and undercutting their pricing in 2024. As I analyzed at the time:

"Free-riding on permissive open source is too tempting to pass on for other vendors. WP Engine uses a common loophole of contributing almost nothing in R&D to WordPress, while selling it as a managed service. This means that they could either easily undercut the pricing of larger players like Automattic which do spend on WordPress's R&D. Alternatively, a company like WP Engine could charge as much, or more, as Automattic, but be able to spend a lot more on marketing, while being similarly profitable. "Saving" on R&D gives the "free-riders" plenty of options to grow their businesses: options not necessarily open to Automattic while they invest as much into R&D as they do.

Commercial open source vendors pressure to end "freeriding". Automattic is likely facing lower revenue growth, with customers choosing vendors like WP Engine which offer a similar service -- getting these customers either via a cheaper price or thanks to more marketing spend. This legal fight could be an effort to force WP Engine to stop eating Automattic's lunch, or perhaps get WP Engine to sell to Automattic, which would cement its leading status in managed Wordpress, while also boosting revenue by $400M a year - according to its own figures".

Vercel managed to avoid the "free-riding" problem with Next.js, but that's no longer possible now that AI makes it trivial to rewrite.

6. Defense or offense?

How should commercial open source companies respond to the threat that a competitor can easily rewrite the software behind the managed solutions which they sell as services?

One obvious response is to make tests private, so that replication is harder for AI. One thing that made it so easy for Cloudflare to rewrite Next was the project's comprehensive test suite. From their announcement (emphasis mine):

"We also want to acknowledge the Next.js team. They've spent years building a framework that raised the bar for what React development could look like. The fact that their API surface is so well-documented and their test suite so comprehensive is a big part of what made this project possible."

Database solution SQLite is famous for its incredible test suite. What some people don't know is that while core SQLite tests are open source, its most comprehensive test suite - TH3 - is closed source. SQLite monetizes its advanced infrastructure as a service for purchase. This is a fair tradeoff: for most contributors, the basic open source tests work well enough. For enterprise users or customers who really care about correctness, it makes sense to purchase advanced testing services from the service's creator.

Open source canvas project, tldraw, announced it will relocate its test suite to a closed source repository; a move which makes plenty of sense. Here's commentary from Simon Willison:

"It's become very apparent over the past few months that a comprehensive test suite is enough to build a completely fresh implementation of any open source library from scratch, potentially in a different language."

In the event, tldraw's announcement turned out to be a joke, but who's laughing now? An open source project with excellent tests is an easy target for an AI agent to execute a full rewrite of it.

Could new licenses be created for the AI era? Existing open source licenses were created on the assumption that humans read open source code, and humans modify it. Agents break that assumption.

Could we see new license types emerge to ban AI agents from modifying projects' source code? It seems pretty far-fetched and hard to implement, but not beyond the realms of possibility.

AI agents are still very new, and going mainstream in tech. Once they break into other industries, I wouldn't be surprised if legal frameworks are reworded to also apply to AI agents. If and when this happens, it would open the path for open source licenses to distinguish between agents and humans.

What is a moat, if code can be trivially ported? A team operating a popular open source project can no longer assume it's expensive to fork or to be completely rewritten, meaning it makes sense to focus on other moats, such as:

- Outstanding (paid) support. AI could make this much easier at a higher quality, if done right.

- Smaller open core, larger closed source part. "Open core" as a business model has been dominant for commercial open source: keep the core of the software open source, while advanced enterprise features are source available or closed source. I would expect more companies to move their additional services to closed source, not source available.

- In-person connection and community. Projects with a real-world community will form a sense of connection that goes beyond code. For example, it's hard to imagine vinext meetups popping up - whereas there are many Next.js communities.

- Infrastructure and hardware remain a massive moat. In a world where software is trivial to copy, infrastructure is still hard to replicate. Commercial open source might make most sense for players that own and operate better infrastructure layers than their rivals, and can therefore offer lower cost, higher reliability, lower latency, higher performance, or a combination of these.

7. AI-world reality

One of the single best AI use cases is full-on rewrites of well-tested products. I estimate that AI sped up the creation of vinext by at least 100x, which is massive. But we don't really see efficiency boosts of anything like that with AI tools, in general. As Laura Tacho shared at The Pragmatic Summit in San Francisco, the average self-reported efficiency 'AI gain' seems to be circa 10%.

I suspect this vast chasm in efficiency boosts is because AI is many times more efficient at "no-brainer tasks" where correctness can be verified with tests, versus those which are more open ended or involve more creativity.

In general, tests are incredibly important for efficient AI usage. On The Pragmatic Engineer Podcast, Peter Steinberger stressed how important "closing the loop" in his developer flow is by instructing the AI to test itself, and ensuring the AI has tests to run that verify correctness.

Automated tests were always considered a best practice for creating maintainable code. Now, having a codebase with extensive tests is the baseline to make AI agents work productively for refactors, rewrites - or even adding new features and verifying that things did not break!

Vendors will start to deploy "migration AI agents" to move customers over to their own stacks. This got lost in Cloudflare's announcement, but it's important:

vinext includes an Agent Skill that handles migration for you. It works with Claude Code, OpenCode, Cursor, Codex, and dozens of other AI coding tools. Install it, open your Next.js project, and tell the AI to migrate:

> npx skills add cloudflare/vinext

Then open your Next.js project in any supported tool and say:

> migrate this project to vinext

The skill handles compatibility checking, dependency installation, config generation, and dev server startup. It knows what vinext supports and will flag anything that needs manual attention.

This is very clever from Cloudflare, and a true "AI-native" move. They have not only used AI to migrate Next.js, but also built an "AI plugin" (a skill) to help customers migrate their existing codebases over to vinext - and deploy on Cloudflare!

This move will surely be copied by other vendors, since migrations which are tedious for humans are much less effort with agents.

AI is making the tech industry more ruthless when it comes to business practices. Laura Tacho said something interesting at The Pragmatic Summit:

"AI is an accelerator, it's a multiplier, and it is moving organizations in different directions."

AI seems to be accelerating the ruthlessness of competition for customers and the speed at which this happens. In one week, Cloudflare rebuilt Next.js, and it's attacking Vercel full-on: claiming their "vibe coded" alternative is more performant and production-ready, and burying at the foot of the launch announcement the crucial information that vinext is very much experimental.

I sense vendors are realizing that there's a limited amount of time in which to use AI to their advantage, and some will decide to use it like Cloudflare has.

On the other hand, AI could be great news for non-commercial open source. AI presents as a threat to commercial open source because it removes existing moats which make code hard to fully rewrite. However, beyond that, AI could help non-commercial open source to thrive:

- With AI, it's easy to fork an open source project and keep the fork in-sync with the original.

- It's trivial to instruct AI to rewrite an open source project to another language or framework.

- …and it's equally trivial for AI to add features to a fork.

For these reasons, I believe there could be a lot more forks and rewrites to come, and more open source projects and code, in general.

Takeaways

Personally, I could not have imagined things changing this quickly in software. Rewriting Next.js in a single week, even to a version that is not quite there - but mostly works? This was out of the question as recently as a few months ago.

Things changed around last December, when Opus 4.5 and GPT-5.2 came out and proved capable of writing most of the code. What used to be expensive is now cheap - like rewriting complete projects - and we still need to learn what the "new" expensive parts of software engineering are.

All this is new territory for everyone. To succeed in the tech industry, you need to be able to capitalize upon change, as Cloudflare has clearly done in this case by making the most of an opportunity created by new technology. It's unclear how popular vinext will become, and how much of a moat Vercel has around the broader Next.js ecosystem, but I suspect that it'd take more than a Next rewrite to make Cloudflare into a viable Next.js platform-as-a-service provider.

-

🔗 r/Leeds Lunch in Leeds - Best value for money? rss

Thank you.

submitted by /u/Bright_Fill_4770

[link] [comments] -

🔗 r/Yorkshire Sunrise over Langsett Res nr Barnsley this Morning rss

Didn’t see one person the whole walk round. Spring is on its way’

submitted by /u/Del_213

[link] [comments]

-

🔗 r/Leeds Leeds is betting big on new bike lanes. Will people use them? rss

submitted by /u/djstimms

[link] [comments] -

🔗 r/Leeds Metal fans in Leeds rss

So I (31/m) am considering reviving an idea I had about a year ago for a meetup style group for metal fans in Leeds. I love black metal personally but don't really know anyone locally with similar music tastes. Idea is for gig meets and just general hangouts. Every 3/4weeks, give or take.

I'm aware of the Leeds rock + metal fans meetup group although that seems dead, I joined their WhatsApp and nothing but silence. If there is anything else similar already existing I'd be keen to find out about it. I don't plan on using the meetup platform as I am limited financially, and they charge subscription fees so if anyone has advice on alternative platforms I'd be very interested.

So, who's interested? Open to all fans of heavy music, 25+ preferred only as I'd feel awkward if it's just students or a generally younger crowd.

I'll create something and update this post, depending on feedback.

EDIT: I've made a WhatsApp group and will try to arrange something for next week, probably. I'll DM everyone who's commented so far; if you want the link, DM me for it. I don't want to post it, to avoid it being flooded with bots.

submitted by /u/GhengisChasm

[link] [comments] -

🔗 Simon Willison Can coding agents relicense open source through a “clean room” implementation of code? rss

Over the past few months it's become clear that coding agents are extraordinarily good at building a weird version of a "clean room" implementation of code.

The most famous version of this pattern is when Compaq created a clean-room clone of the IBM BIOS back in 1982. They had one team of engineers reverse engineer the BIOS to create a specification, then handed that specification to another team to build a new ground-up version.

This process used to take multiple teams of engineers weeks or months to complete. Coding agents can do a version of this in hours - I experimented with a variant of this pattern against JustHTML back in December.

There are a lot of open questions about this, both ethically and legally. These appear to be coming to a head in the venerable chardet Python library.

chardet was created by Mark Pilgrim back in 2006 and released under the LGPL. Mark retired from public internet life in 2011 and chardet's maintenance was taken over by others, most notably Dan Blanchard who has been responsible for every release since 1.1 in July 2012.

Two days ago Dan released chardet 7.0.0 with the following note in the release notes:

Ground-up, MIT-licensed rewrite of chardet. Same package name, same public API — drop-in replacement for chardet 5.x/6.x. Just way faster and more accurate!

Yesterday Mark Pilgrim opened #327: No right to relicense this project:

[...] First off, I would like to thank the current maintainers and everyone who has contributed to and improved this project over the years. Truly a Free Software success story.

However, it has been brought to my attention that, in the release 7.0.0, the maintainers claim to have the right to "relicense" the project. They have no such right; doing so is an explicit violation of the LGPL. Licensed code, when modified, must be released under the same LGPL license. Their claim that it is a "complete rewrite" is irrelevant, since they had ample exposure to the originally licensed code (i.e. this is not a "clean room" implementation). Adding a fancy code generator into the mix does not somehow grant them any additional rights.

Dan's lengthy reply included:

You're right that I have had extensive exposure to the original codebase: I've been maintaining it for over a decade. A traditional clean-room approach involves a strict separation between people with knowledge of the original and people writing the new implementation, and that separation did not exist here.

However, the purpose of clean-room methodology is to ensure the resulting code is not a derivative work of the original. It is a means to an end, not the end itself. In this case, I can demonstrate that the end result is the same — the new code is structurally independent of the old code — through direct measurement rather than process guarantees alone.

Dan goes on to present results from the JPlag tool - which describes itself as "State-of-the-Art Source Code Plagiarism & Collusion Detection" - showing that the new 7.0.0 release has a max similarity of 1.29% with the previous release and 0.64% with the 1.1 version. Other release versions had similarities more in the 80-93% range.

He then shares critical details about his process, highlights mine:

For full transparency, here's how the rewrite was conducted. I used the superpowers brainstorming skill to create a design document specifying the architecture and approach I wanted based on the following requirements I had for the rewrite [...]

I then started in an empty repository with no access to the old source tree, and explicitly instructed Claude not to base anything on LGPL/GPL-licensed code. I then reviewed, tested, and iterated on every piece of the result using Claude. [...]

I understand this is a new and uncomfortable area, and that using AI tools in the rewrite of a long-standing open source project raises legitimate questions. But the evidence here is clear: 7.0 is an independent work, not a derivative of the LGPL-licensed codebase. The MIT license applies to it legitimately.

Since the rewrite was conducted using Claude Code there are a whole lot of interesting artifacts available in the repo. 2026-02-25-chardet-rewrite-plan.md is particularly detailed, stepping through each stage of the rewrite process in turn - starting with the tests, then fleshing out the planned replacement code.

There are several twists that make this case particularly hard to confidently resolve:

- Dan has been immersed in chardet for over a decade, and has clearly been strongly influenced by the original codebase.

- There is one example where Claude Code referenced parts of the codebase while it worked, as shown in the plan - it looked at metadata/charsets.py, a file that lists charsets and their properties expressed as a dictionary of dataclasses.

- More complicated: Claude itself was very likely trained on chardet as part of its enormous quantity of training data - though we have no way of confirming this for sure. Can a model trained on a codebase produce a morally or legally defensible clean-room implementation?

- As discussed in this issue from 2014 (where Dan first openly contemplated a license change) Mark Pilgrim's original code was a manual port from C to Python of Mozilla's MPL-licensed character detection library.

- How significant is the fact that the new release of chardet used the same PyPI package name as the old one? Would a fresh release under a new name have been more defensible?

I have no idea how this one is going to play out. I'm personally leaning towards the idea that the rewrite is legitimate, but the arguments on both sides of this are entirely credible.

I see this as a microcosm of the larger question around coding agents for fresh implementations of existing, mature code. This question is hitting the open source world first, but I expect it will soon start showing up in Compaq-like scenarios in the commercial world.

Once commercial companies see that their closely held IP is under threat I expect we'll see some well-funded litigation.

Update 6th March 2026: A detail that's worth emphasizing is that Dan does not claim that the new implementation is a pure "clean room" rewrite. Quoting his comment again:

A traditional clean-room approach involves a strict separation between people with knowledge of the original and people writing the new implementation, and that separation did not exist here.

I can't find it now, but I saw a comment somewhere that pointed out the absurdity of Dan being blocked from working on a new implementation of character detection as a result of the volunteer effort he put into helping to maintain an existing open source library in that domain.

I enjoyed Armin's take on this situation in AI And The Ship of Theseus, in particular:

There are huge consequences to this. When the cost of generating code goes down that much, and we can re-implement it from test suites alone, what does that mean for the future of software? Will we see a lot of software re-emerging under more permissive licenses? Will we see a lot of proprietary software re-emerging as open source? Will we see a lot of software re-emerging as proprietary?

-

🔗 r/Harrogate Channel 4's 'The Dog House' is Looking for Loving Homes in Harrogate rss

Hi everyone! 😊 I'm part of the team behind Channel 4's The Dog House and I'm wondering whether this might be of interest to anyone here?

We're looking for dog lovers in Harrogate who could offer a loving home and a fresh new start to a rescue dog in need for our next series filming in spring. Filmed in partnership with Woodgreen Pets Charity, the series shines a light on how life-changing the bond between humans and dogs can be for both sides.

If you're interested to apply you can do so at: https://c4thedoghousetakepart.co.uk/ I've also included our flyer, in case anyone would like to share it with others they might know.

submitted by /u/Fallevo

[link] [comments]

-

🔗 r/wiesbaden Car painter rss

Hello dear people of Wiesbaden,

can anyone recommend a car painter around here?

submitted by /u/nikitsolo

[link] [comments] -

🔗 r/LocalLLaMA Ran Qwen 3.5 9B on M1 Pro (16GB) as an actual agent, not just a chat demo. Honest results. rss

Quick context: I run a personal automation system built on Claude Code. It's model-agnostic, so switching to Ollama was a one-line config change, nothing else needed to change. I pointed it at Qwen 3.5 9B and ran real tasks from my actual queue.

Hardware: M1 Pro MacBook, 16 GB unified memory. Not a Mac Studio, just a regular laptop.

Setup:

> brew install ollama

> ollama pull qwen3.5:9b

> ollama run qwen3.5:9b

Ollama exposes an OpenAI-compatible API at localhost:11434. Anything targeting the OpenAI format just points there. No code changes.

What actually happened:

- Memory recall: worked well. My agent reads structured memory files and surfaces relevant context. Qwen handled this correctly. For "read this file, find the relevant part, report it" type tasks, 9B is genuinely fine.

- Tool calling: reasonable on straightforward requests. It invoked the right tools most of the time on simple agentic tasks. This matters more than text quality when you're running automation.

- Creative and complex reasoning: noticeable gap. Not a surprise.

The point isn't comparing it to Opus. It's whether it can handle a real subset of agent work without touching a cloud API. It can. The slowness was within acceptable range. Aware of it, not punished by it.

Bonus: iPhone

Ran Qwen 0.8B and 2B on iPhone 17 Pro via PocketPal AI (free, open source, on the App Store). Download the model once over Wi-Fi, then enable airplane mode. It still responds. Nothing left the device. The tiny models have obvious limits. But the fact that this is even possible on hardware you already own in 2026 feels like a threshold has been crossed.

The actual framing: This isn't "local AI competes with Claude." It's "not every agent task needs a frontier model." A lot of what agent systems do is genuinely simple: read a file, format output, summarize a short note, route a request. That runs locally without paying per token or sending anything anywhere. The privacy angle is also real if you're building on personal data.

I'm curious what hardware others are running 9B models on, and whether anyone has integrated them into actual agent pipelines vs. just using them for chat.

Full write-up with more detail on the specific tasks and the cost routing angle: https://thoughts.jock.pl/p/local-llm-macbook-iphone-qwen-experiment

submitted by /u/Joozio
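To make the "anything targeting the OpenAI format just points there" remark concrete, here is a minimal sketch of a chat request against a local Ollama server, using only the Python standard library. The `/v1/chat/completions` path is the OpenAI-style endpoint as I understand Ollama's compatibility layer, so treat it as an assumption; the helper names `build_chat_request` and `chat` are hypothetical, and the model tag is the one pulled in the setup above.

```python
import json
import urllib.request

# Assumption: Ollama is running locally and serves its OpenAI-compatible API under /v1.
BASE_URL = "http://localhost:11434/v1"


def build_chat_request(prompt, model="qwen3.5:9b", base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, **kwargs):
    """Send the request and return the reply text (needs a running server)."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Only `chat` touches the network. Swapping `base_url` for another OpenAI-compatible endpoint is roughly the kind of one-line switch the post describes.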

[link] [comments]

-

🔗 r/LocalLLaMA Final Qwen3.5 Unsloth GGUF Update! rss

Hey r/LocalLLaMA, this week we worked on further improving the best size/KLD tradeoff for Qwen3.5, and we’re excited to share new GGUF benchmarks for Qwen3.5-122B-A10B and Qwen3.5-35B-A3B (99.9% KL divergence). This will likely be our final GGUF update.

We’re also deeply saddened by the news around the Qwen team, and incredibly grateful for everything they’ve done for the open source community! For a lot of model releases, they had to stay up all night and not sleep.

- All GGUFs now use our new imatrix calibration dataset so you might see small improvements in chat, coding, long context, and tool-calling use-cases. We are always manually improving this dataset and it will change often.

- This is a follow up to https://www.reddit.com/r/LocalLLaMA/comments/1rgel19/new_qwen3535ba3b_unsloth_dynamic_ggufs_benchmarks/

- We further enhanced our quantization method for Qwen3.5 MoEs to reduce Maximum KLD directly. The 99.9% figure is what is generally used, but for massive outliers, Maximum KLD can be useful. Our new method generally pushes the Maximum KLD down quite a lot compared with the pre-March 5th update. UD-Q4_K_XL is 8% bigger, but reduces maximum KLD by 51%!

| Quant | Old GB | New GB | Max KLD Old | Max KLD New |
|---|---|---|---|---|
| UD-Q2_K_XL | 12.0 | 11.3 (-6%) | 8.237 | 8.155 (-1%) |
| UD-Q3_K_XL | 16.1 | 15.5 (-4%) | 5.505 | 5.146 (-6.5%) |
| UD-Q4_K_XL | 19.2 | 20.7 (+8%) | 5.894 | 2.877 (-51%) |
| UD-Q5_K_XL | 23.2 | 24.6 (+6%) | 5.536 | 3.210 (-42%) |

- Re-download Qwen3.5-35B-A3B, 27B, and 122B-A10B as they're now all updated. Re-download 397B-A17B after today’s update (still uploading!)

- Qwen3.5-27B and 122B-A10B include the earlier chat template fixes for better tool-calling/coding output. 397B-A17B will also be updated today to include this.

- LM Studio now supports toggling “thinking” for our GGUFs. Read our guide or run `lms get unsloth/qwen3.5-4b`. This process will be easier very soon.

- Benchmarks were conducted using the latest versions for every GGUF provider.

- Replaced BF16 layers with F16 for faster inference on unsupported devices.

- Qwen3.5-35B-A3B now has all variants (Q4_K_M, Q8_0, BF16, etc.) uploaded.

- A reminder: KLD and perplexity benchmarks do not exactly reflect real-world use-cases.

- Links to new GGUFs: Qwen3.5-35B-A3B-GGUF, Qwen3.5-122B-A10B-GGUF, Qwen3.5-397B-A17B-GGUF (397B still uploading!)
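For readers unfamiliar with the KLD figures in this list, here is a rough sketch of how such numbers could be computed: a per-token KL divergence between the reference model's and the quantized model's next-token distributions, summarized by a high percentile and by the maximum. This is an illustration under assumptions, not Unsloth's benchmark code; the function names, the toy distributions, and the nearest-rank percentile are all my own choices.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) in nats for two discrete distributions over the same vocabulary."""
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


def summarize(klds, pct=99.9):
    """Return (nearest-rank percentile, maximum) of a list of per-token KLD values."""
    ordered = sorted(klds)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1], ordered[-1]


# Toy data: two token positions, three-word vocabulary (hypothetical numbers).
reference = [[0.7, 0.2, 0.1], [0.5, 0.4, 0.1]]
quantized = [[0.6, 0.3, 0.1], [0.5, 0.4, 0.1]]

klds = [kl_divergence(p, q) for p, q in zip(reference, quantized)]
high_percentile, worst = summarize(klds)
print(f"per-token KLD: {klds}")
print(f"99.9th percentile: {high_percentile:.4f}, max: {worst:.4f}")
```

A percentile ignores the few worst positions by design, while the maximum is set entirely by them, which may be why the post reports both.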

You can also now fine-tune Qwen3.5 in Unsloth via our free notebooks! Thanks a lot everyone!

submitted by /u/danielhanchen

[link] [comments] -

🔗 r/york misty walk this morning :) rss

it was like a lovely dream

submitted by /u/whtmynm

[link] [comments]

-

🔗 r/wiesbaden Smoke Together 2.0 rss

Hello everyone, since only one person showed up to the last meeting, which was probably due to the weather, I thought we could try again tomorrow around 6 pm, at the same spot on Kirchenpfad: 50.083057, 8.216951

Who's interested?

submitted by /u/Wide-Distribution-78

[link] [comments] -

🔗 r/Leeds Kirkstall Abbey rss

Song is ‘Departure’ by IHF

submitted by /u/mr_errington

[link] [comments] -

🔗 r/Leeds Did Mr sunshine call in sick today... rss

Weather forecast said sun all day!

submitted by /u/newtobitcoin111

[link] [comments] -

🔗 r/wiesbaden AfD event was cancelled 🤝 rss

submitted by /u/Chris0607

[link] [comments] -

🔗 r/LocalLLaMA Qwen3 vs Qwen3.5 performance rss

Note that dense models use their listed parameter size (e.g., 27B), while Mixture-of-Experts models (e.g., 397B A17B) are converted to an effective size using $\sqrt{\text{total} \times \text{active}}$ to approximate their compute-equivalent scale. Data source: https://artificialanalysis.ai/leaderboards/models

submitted by /u/Balance-
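As a quick worked example of that conversion, the sketch below applies the geometric-mean rule to a few Qwen3.5 sizes named elsewhere in this feed. The function name `effective_size` is hypothetical, and the rule itself is the poster's approximation, not an official metric.

```python
import math


def effective_size(total_b, active_b=None):
    """Dense models keep their listed size; MoE models use sqrt(total * active).

    Sizes are in billions of parameters.
    """
    if active_b is None:
        return float(total_b)
    return math.sqrt(total_b * active_b)


# (total, active) in billions; None marks a dense model.
models = {
    "27B (dense)": (27, None),
    "35B-A3B": (35, 3),
    "122B-A10B": (122, 10),
    "397B-A17B": (397, 17),
}

for name, (total, active) in models.items():
    print(f"{name}: effective size about {effective_size(total, active):.1f}B")
```

Under this rule the 397B-A17B model lands at roughly 82B, and the 35B-A3B model at roughly 10B, well below the dense 27B model.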

[link] [comments]

-

🔗 r/Leeds Anyone know what they're filming at Browns? rss

Saw some riggers working on Browns yesterday blocking out all the windows. Anyone know what's being filmed?

submitted by /u/Itsalladeepend

[link] [comments] -

🔗 r/Leeds WFH / Remote Work Advice rss

Hello! Hoping for some advice on co-working in Leeds.

I'm M 32, I work in Consumer Goods/Tech and I am 100% WFH and while the convenience is incredible, the isolation can be a challenge. I would like to establish a solid rhythm of working from town a few days a week and even better, find other people wanting to do the same and have a bit of craic. Grab lunch, beer afterwards etc.

I've tried a few co-working spaces in Leeds but haven't found something that feels sustainable, yet.

What I've tried so far:

Santander work cafe is great.

It's free, but it doesn't open til 9 and they often host events making it hard to establish a solid routine. I have a lot of calls, so I found that difficult to manage while working in there.

2Work is great but is expensive.

At £20 a go, that becomes £30 after train/parking. It's great if I'm meeting friends after work but not something I could make a regular routine of.

Waterlane Boathouse is good too, but I couldn't do a full 9-5 there and it's hard to take calls. Obvs, it's a pub not a corporate office.

What I'm looking for:

- A space where I can reliably get a desk and would be able to take calls throughout the day

- The cheaper the better

- Parking would be ideal

If you're in a similar position with WFH/Remote and want to find community during the week please drop me a line!

submitted by /u/Longjumping-Stop-662

[link] [comments] -

🔗 r/reverseengineering DLLHijackHunter v1.2.0 - Now with automated UAC Bypass & COM AutoElevation discovery rss

submitted by /u/Jayendra_J

[link] [comments] -

🔗 r/Leeds 38/39 Meanwood buses rss

Why have these been changed to single deckers? The amount of full buses that drive past because they’re not a double decker! Trying to drive less to save money on petrol but my god first bus are making it hard

submitted by /u/sm9981

[link] [comments] -

🔗 Project Zero On the Effectiveness of Mutational Grammar Fuzzing rss

Mutational grammar fuzzing is a fuzzing technique in which the fuzzer uses a predefined grammar that describes the structure of the samples. When a sample gets mutated, the mutations happen in such a way that any resulting samples still adhere to the grammar rules, thus the structure of the samples gets maintained by the mutation process. In case of coverage-guided grammar fuzzing, if the resulting sample (after the mutation) triggers previously unseen code coverage, this sample is saved to the sample corpus and used as a basis for future mutations.
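The loop described above can be sketched in a few lines. This is a toy model, not Jackalope's implementation: the "grammar" is just a hypothetical token alphabet, and `coverage` is a made-up function that maps each distinct token to one code location.

```python
import random

GRAMMAR_TOKENS = ["a", "b", "c", "d"]  # hypothetical stand-in for grammar rules


def mutate(sample, rng):
    """Replace one token with another valid token, so the result still fits the grammar."""
    out = list(sample)
    out[rng.randrange(len(out))] = rng.choice(GRAMMAR_TOKENS)
    return out


def coverage(sample):
    """Toy target: each distinct token 'covers' one code location."""
    return {f"handler_{tok}" for tok in sample}


def fuzz(seed_sample, iterations, rng):
    """Coverage-guided mutational loop: keep only samples that reach unseen coverage."""
    corpus = [seed_sample]
    seen = set(coverage(seed_sample))
    for _ in range(iterations):
        child = mutate(rng.choice(corpus), rng)
        new = coverage(child) - seen
        if new:
            corpus.append(child)  # saved as a basis for future mutations
            seen |= new
    return corpus, seen


corpus, seen = fuzz(["a", "a", "a"], iterations=200, rng=random.Random(0))
print(len(corpus), sorted(seen))
```

Note that a sample is saved the moment it adds any coverage, regardless of how much of it is unchanged from its parent.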

This technique has proven capable of finding complex issues and I have used it successfully in the past, including to find issues in XSLT implementations in web browsers and even JIT engine bugs.

However, despite the approach being effective, it is not without its flaws which, for a casual fuzzer user, might not be obvious. In this blogpost I will introduce what I perceive to be the flaws of the mutational coverage-guided grammar fuzzing approach. I will also describe a very simple but effective technique I use in my fuzzing runs to counter these flaws.

Please note that while this blogpost focuses on grammar fuzzing, the issues discussed here are not limited to grammar fuzzing as they also affect other structure-aware fuzzing techniques to various degrees. This research is based on the grammar fuzzing implementation in my Jackalope fuzzer, but the issues are not implementation specific.

Issue #1: More coverage does not mean more bugs

The fact that coverage is not a great measure for finding bugs is well known and affects coverage-guided fuzzing in general, not just grammar fuzzing. However this tends to be more problematic for the types of targets where structure-aware fuzzing (including grammar fuzzing) is typically used, such as in language fuzzing. Let’s demonstrate this on an example:

In language fuzzing, bugs often require functions to be called in a certain order or that a result of one function is used as an input to another function. To trigger a recent bug in libxslt two XPath functions need to be called, the document() function and the generate-id() function, where the result of the document() function is used as an input to generate-id() function. There are other requirements to trigger the bug, but for now let’s focus on this requirement.

Here’s a somewhat minimal sample required to trigger the bug:

<?xml version="1.0"?>
<xsl:stylesheet xml:base="#" version="1.0" xmlns:xsl="http://www.w3.org/1999/XSL/Transform">
  <xsl:template match="/">
    <xsl:value-of select="generate-id(document('')/xsl:stylesheet/xsl:template/xsl:message)" />
    <xsl:message terminate="no"></xsl:message>
  </xsl:template>
</xsl:stylesheet>

With the most relevant part for this discussion being the following element and the XPath expression in the select attribute:

<xsl:value-of select="generate-id(document('')/xsl:stylesheet/xsl:template/xsl:message)" />

If you run a mutational, coverage guided fuzzer capable of generating XSLT stylesheets, what it might do is generate two separate samples containing the following snippets:

Sample 1:

<xsl:value-of select="document('')/xsl:stylesheet/xsl:template/xsl:message" />

Sample 2:

<xsl:value-of select="generate-id(/a)" />

The union of these two samples’ coverage is going to be the same as the coverage of the buggy sample, however having document() and generate-id() in two different samples in the corpus isn’t really helpful for triggering the bug.

It is also possible for the fuzzer to generate a single sample with both of these functions that again results in the same coverage as the buggy sample, but with both functions operating on independent data:

<xsl:template match="/">
  ...
  <xsl:value-of select="document('')/xsl:stylesheet/xsl:template/xsl:message" />
  <xsl:value-of select="generate-id(/a)" />
  ...
</xsl:template>

This issue also demonstrates how crucial it is for any fuzzer to be able to combine multiple samples in the corpus in order to produce new samples. However, in this case, note that combining the two samples wouldn’t trigger any previously unseen coverage and thus the resulting sample wouldn’t be saved, despite climbing closer to triggering the bug.

In this case, because triggering the bug requires chaining only two function calls, a fuzzer would eventually find this bug by randomly combining the samples. But in case three or more function calls need to be chained in order to trigger the bug, it becomes increasingly expensive to do so and coverage feedback, as demonstrated, does not really help.
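The coverage argument can be checked on a toy model. In the sketch below, XPath expressions are represented as nested tuples and "coverage" is simply the set of function names that get called; both are simplifying assumptions for illustration, not how a real target reports coverage.

```python
def functions_called(expr):
    """Coverage proxy: the set of function names appearing in a nested call tuple."""
    name, *args = expr
    covered = {name}
    for arg in args:
        if isinstance(arg, tuple):  # a nested call feeding this one
            covered |= functions_called(arg)
    return covered


def coverage(sample):
    """Coverage of a sample, i.e. of all expressions it contains."""
    return set().union(*(functions_called(e) for e in sample))


sample_1 = [("document", "''")]                  # document() on its own
sample_2 = [("generate-id", "/a")]               # generate-id() on its own
independent = sample_1 + sample_2                # both, on independent data
buggy = [("generate-id", ("document", "''"))]    # document() feeding generate-id()

corpus_coverage = coverage(sample_1) | coverage(sample_2)

# Neither the combined sample nor the chained one adds coverage,
# so a coverage-guided fuzzer has no reason to keep either.
print(coverage(independent) - corpus_coverage)
print(coverage(buggy) - corpus_coverage)
```

Both differences are empty sets: from the fuzzer's point of view, the sample that chains the two calls looks no more interesting than what is already in the corpus.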

In fact, triggering this bug might be easier (or equally easy) with a generative fuzzer (that will generate a new sample from scratch every time) without coverage feedback. But even though coverage feedback is not ideal, it still helps in a lot of cases.

As previously stated, this issue does not only affect grammar fuzzing, but also other fuzzing approaches, in particular those focused on language fuzzing. For example, Fuzzilli documentation describes a similar version of this problem.

A possible solution for this problem would be having some kind of dataflow coverage that could identify that data flowing from document() into generate-id() is something previously unseen and worth saving. However, I am not aware of any practical implementation of such an approach.
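To make the idea concrete, here is a minimal sketch of what such a signal could look like on a toy expression representation. It is not an implementation for a real target: expressions are modelled as nested tuples, `dataflow_pairs` is a hypothetical helper, and a real XPath engine would need instrumentation to observe which function's result reaches which other function.

```python
def dataflow_pairs(expr):
    """Return the set of (producer, consumer) pairs for directly nested calls.

    An expression is either a string (a leaf such as a path) or a tuple
    (function_name, arg1, arg2, ...).
    """
    pairs = set()
    if isinstance(expr, tuple):
        consumer, *args = expr
        for arg in args:
            if isinstance(arg, tuple):
                # The result of arg[0] flows into consumer.
                pairs.add((arg[0], consumer))
            pairs |= dataflow_pairs(arg)
    return pairs


# Both functions used, but on independent data.
independent = [("document", "''"), ("generate-id", "/a")]
# The output of document() is passed into generate-id().
chained = [("generate-id", ("document", "''"))]

seen = set()
for expr in independent:
    seen |= dataflow_pairs(expr)

new_pairs = set()
for expr in chained:
    new_pairs |= dataflow_pairs(expr)
new_pairs -= seen

print(new_pairs)  # {('document', 'generate-id')}
```

Under this toy metric the chained expression registers as new even though plain function-level coverage would be identical for both cases.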

Issue #2: Mutational grammar fuzzing tends to produce samples that are very similar

To demonstrate this issue, let’s take a look at some samples from one of my XSLT fuzzing sessions:

Part of sample 1128 in the corpus:

    <?xml version="1.0" encoding="UTF-8"?><xsl:fallback namespace="http://www.w3.org/url2" ><aaa ></aaa><ddd xml:id="{lxl:node-set($name2)}:" att3="{[$name4document('')att4.|document('')$name4namespace::]document('')}{ns2}" ></ns3:aaa></xsl:fallback>

Part of sample 603 in the corpus:

    <?xml version="1.0" encoding="UTF-8"?><xsl:fallback namespace="http://www.w3.org/url2" ><aaa ></aaa><ddd xml:id="{lxl:node-set($name2)}:" att3="{[$name4document('')att4.|document('')$name4namespace::]document('')}{ns2}" xmlns:xsl="http://www.w3.org/url3" ><xsl:output ></xsl:output>eHhDC?^5=<xsl:choose elements="eee" ><xsl:copy stylesheet-prefix="ns3" priority="3" ></xsl:copy></xsl:choose></ddd>t</xsl:fallback>

As you can see from the example, even though these two samples are different and come from different points in time during the fuzzing session, a large part of the two samples is the same.

This follows from the greedy nature of mutational coverage guided fuzzing: when a sample is mutated to produce new coverage, it gets immediately saved to the corpus. Likely a large part of the original sample wasn’t mutated, but it is still part of the new sample so it gets saved. This new sample can get mutated again and if the resulting (third) sample triggers new coverage it will also get saved, despite large similarities with the starting sample. This results in a general lack of diversity in a corpus produced by mutational fuzzing.

While Jackalope’s grammar mutator can also ignore the base sample and generate an entire sample from scratch, it is rare for this to trigger new coverage compared to the more localized mutations, especially later on in the fuzzing session.

One approach to combating this issue could be to minimize each new sample so that only the part that triggers new coverage gets saved, but I observed that this isn’t an optimal strategy either and that it’s beneficial to leave some of the original sample. Jackalope implements this by minimizing each grammar sample, but stopping the minimization once the sample has been reduced to a certain number of grammar tokens.
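The general shape of bounded minimization can be sketched as follows. This is an illustration on a flat token list, not Jackalope's actual minimizer (which works on grammar samples); the `min_tokens` parameter and the `triggers_new_coverage` callback are hypothetical names chosen for this example.

```python
def minimize(tokens, triggers_new_coverage, min_tokens):
    """Greedily drop tokens while the sample still triggers the new coverage,
    stopping once the sample has shrunk to min_tokens."""
    tokens = list(tokens)
    i = 0
    while i < len(tokens) and len(tokens) > min_tokens:
        candidate = tokens[:i] + tokens[i + 1:]
        if triggers_new_coverage(candidate):
            tokens = candidate  # token i was not needed; retry the same index
        else:
            i += 1  # token i is required; keep it and move on
    return tokens


sample = ["a", "b", "NEW", "c", "d", "e"]


def needs_new_token(toks):
    # Stand-in for running the target and checking for the new coverage.
    return "NEW" in toks


print(minimize(sample, needs_new_token, min_tokens=3))  # ['NEW', 'd', 'e']
```

With `min_tokens=0` the same call would reduce the sample to `['NEW']` alone; the floor keeps some of the surrounding context in the saved sample.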

Even though this blogpost focuses on grammar fuzzing, I observed this issue with other structure aware fuzzers as well.

A simple solution?

Both of these issues hint that there might be benefits to combining generative fuzzing with mutational fuzzing in some way. Generative fuzzing produces more diverse samples than mutational fuzzing but suffers from other issues; for example, it typically generates lots of samples that trigger errors in the target. Additionally, as stated previously, although coverage is not an ideal criterion for finding bugs, it is still helpful in a lot of cases.

In the past, when I was doing grammar fuzzing on a large number of machines, an approach I used was to delay syncing individual fuzz workers. That way, each worker would initially work with its own (fully independent) corpus. Only after some time had passed would the fuzzers exchange sample sets, with each worker getting the samples that correspond to the coverage it was missing.

But what to do when fuzzing on a single machine? During my XSLT fuzzing project, I used the following approach:

1. Start a fuzzing worker with an empty corpus. Run for T seconds.
2. After T seconds, sync the worker with the fuzzing server. Get the missing coverage and corresponding samples from the server. Upload any coverage the server doesn’t have (and the corresponding samples) to the server.
3. Run with the combined corpus (generated by the worker + obtained from the server) for another T seconds.
4. Sync with the server again (to upload any new samples) and shut down the worker.
5. Go back to step 1.

The result is that the fuzzing worker spends half of the time creating a fully independent corpus generated from scratch and half of the time working on a larger corpus that also incorporates interesting samples (as measured by the coverage) from the previous workers. This results in more sample diversity as each new generation is independent from the previous one. However the worker eventually still ends up with a sample set corresponding to the full coverage seen so far during any worker lifetime. Ideally, new coverage and, more importantly, new bugs can be found by combining the fresh samples from the current generation with samples from the previous generations.
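The sync step can be modelled with a small sketch. This is a toy illustration of a coverage-keyed exchange, not Jackalope's server protocol: the `Server` class, the `sync` function and the edge and sample names are all hypothetical.

```python
class Server:
    """Holds one sample per coverage element seen by any worker so far."""

    def __init__(self):
        self.samples = {}  # coverage element -> sample that reached it


def sync(worker_samples, server):
    """Two-way sync keyed on coverage.

    The worker downloads samples for coverage it lacks and uploads samples
    for coverage the server lacks. Returns (downloaded, uploaded) counts.
    """
    missing_on_worker = {c: s for c, s in server.samples.items()
                         if c not in worker_samples}
    missing_on_server = {c: s for c, s in worker_samples.items()
                         if c not in server.samples}
    worker_samples.update(missing_on_worker)
    server.samples.update(missing_on_server)
    return len(missing_on_worker), len(missing_on_server)


server = Server()

# Generation 1 starts from an empty corpus and finds two edges on its own.
gen1 = {"edge_a": "sample_1", "edge_b": "sample_2"}
r1 = sync(gen1, server)

# Generation 2 also starts from scratch and reaches edge_b via a different sample.
gen2 = {"edge_b": "sample_3", "edge_c": "sample_4"}
r2 = sync(gen2, server)

print(r1, r2)  # (0, 2) (1, 1)
print(gen2["edge_b"])  # sample_3
```

Note that the second generation keeps its own, independently generated sample for `edge_b` while still picking up `edge_a` from the server, which is where the extra diversity comes from in this model.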

In Jackalope, this can be implemented by first running the server, e.g.

    /path/to/fuzzer -start_server 127.0.0.1:8337 -out serverout

And then running the workers sequentially with the following Python script:

    import subprocess
    import time

    # Seconds a worker runs on its own corpus before syncing with the server.
    T = 3600

    while True:
        # Start every generation from a clean worker output directory.
        subprocess.run(["rm", "-rf", "workerout"])
        p = subprocess.Popen([
            "/path/to/fuzzer",
            "-grammar", "grammar.txt",
            "-instrumentation", "sancov",
            "-in", "empty",
            "-out", "workerout",
            "-t", "1000",
            "-delivery", "shmem",
            "-iterations", "10000",
            "-mute_child",
            "-nthreads", "6",
            "-server", "127.0.0.1:8337",
            "-server_update_interval", str(T),
            "--", "./harness", "-m", "@@",
        ])
        # T seconds on the worker's own corpus plus T seconds on the combined corpus.
        time.sleep(T * 2)
        p.kill()

Note that the Jackalope parameters in the script above are from my libxslt fuzzing run and should be adjusted according to the target.

Additionally, Jackalope implements the -skip_initial_server_sync flag to avoid syncing a worker with the server as soon as the worker starts, but this flag is now the default in grammar fuzzing mode so it does not need to be specified explicitly.

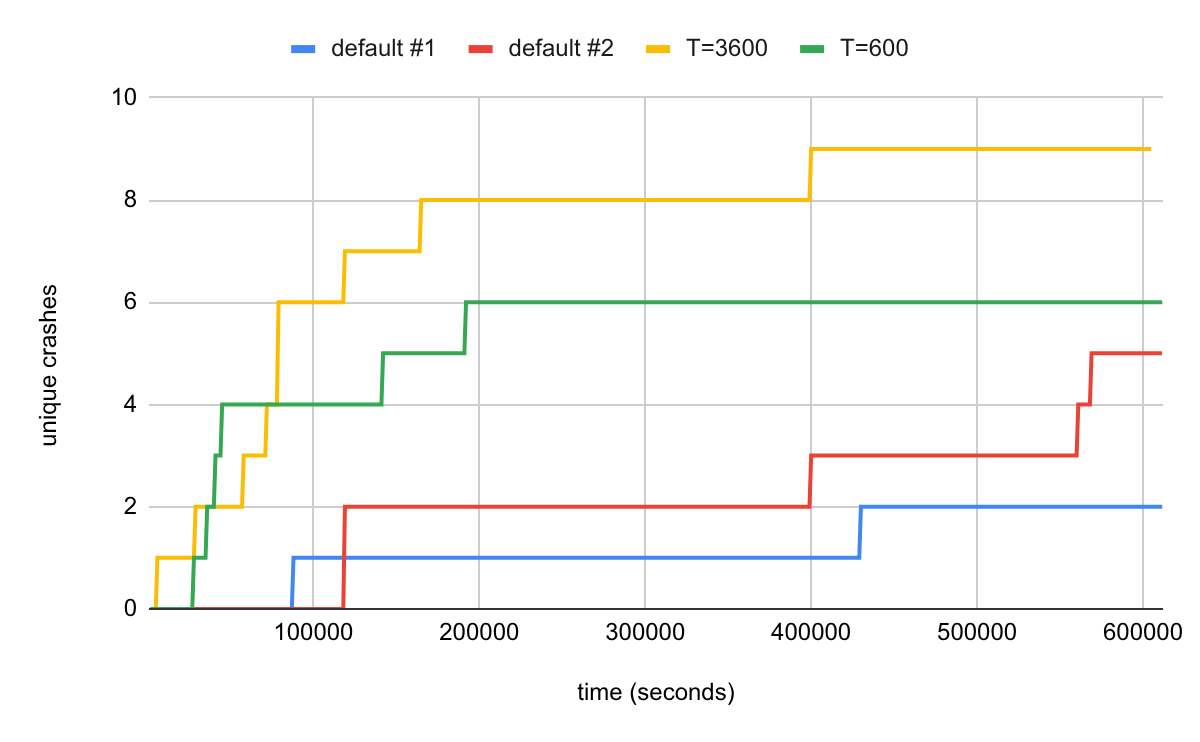

Does this trick work better than running a single uninterrupted fuzzing session? Let’s do some experiments. I used an older version of libxslt as the target (libxslt commit 2ee18b3517ca7144949858e40caf0bbf9ab274e5, libxml2 commit 5737466a31830c017867e3831a329c8f605c877b) and measured the number of unique crashes over time. Note that while the number of unique crashes does not directly correspond to the number of unique bugs, being able to trigger the same bug in different ways still gives a good indication of bug finding capabilities. I ran each session for one week on a single machine.

I ran two default experiments (with a single long-lived worker) as well as two experiments with the proposed solution using different values of T: T=3600 (one hour) and T=600 (10 minutes).

As demonstrated in the chart, restarting the worker periodically (but keeping the server), as proposed in this blog post, helped uncover more unique crashes than either of the default sessions. The crashes were also found more quickly. The default sessions proved sensitive to starting conditions: one run discovered 5 unique crashes during the experiment time, but the other only 2.

The value of T dictates how soon a worker switches from working only on its own samples to working on its own plus the server’s samples. The best value in the libxslt experiment (3600) corresponds to the point where the worker has already found most of the “easy” coverage and discovered the corresponding samples. As can be seen from the experiment, different values of T can produce different results. The optimal value is likely target-dependent.

Conclusion

Although the trick described in this blogpost is very simple, it nevertheless worked surprisingly well and helped discover issues in libxslt more quickly than I would likely have been able to using default settings. It also underlines the benefits of experimenting with different fuzzing setups according to the target specifics, rather than relying on out-of-the-box tooling.

Future work might include researching fuzzing strategies that favor novelty and would e.g. replace samples with the newer ones, even when doing so does not change the overall fuzzer coverage.
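One possible reading of such a strategy is sketched below. This is purely an assumption about how a novelty-favoring corpus could behave, not something the post or Jackalope implements: a new sample that adds no coverage may still replace the oldest sample whose coverage it fully subsumes, so the total coverage never shrinks. The `add_sample` function and the sample names are hypothetical.

```python
def add_sample(corpus, name, coverage):
    """corpus is a list of (name, coverage) pairs ordered oldest first."""
    total = set().union(*(cov for _, cov in corpus))
    if coverage - total:
        # Classic behaviour: new coverage always gets saved.
        corpus.append((name, coverage))
        return "added"
    for i, (_, old_cov) in enumerate(corpus):
        if old_cov <= coverage:
            # The newer sample covers everything the older one did: swap it in.
            del corpus[i]
            corpus.append((name, coverage))
            return "replaced"
    return "discarded"


corpus = []
results = [
    add_sample(corpus, "s1", {"a", "b"}),
    add_sample(corpus, "s2", {"c"}),
    add_sample(corpus, "s3", {"a", "b"}),  # same coverage as s1, but newer
    add_sample(corpus, "s4", {"a"}),       # subsumes nothing in the corpus
]

print(results)  # ['added', 'added', 'replaced', 'discarded']
print([name for name, _ in corpus])  # ['s2', 's3']
```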

-

🔗 r/Yorkshire What’s in Mytholmroyd? rss

I’m in Mytholmroyd for work for a few hours today. What’s actually here? Anything I should see before I go?

Cheers!

submitted by /u/ANuggetEnthusiast

[link] [comments] -

🔗 MetaBrainz Remembering mayhem rss

Rob Kaye (also known to the community and his peers as ruaok and mayhem) was many things. Friend, partner, colleague, 'that guy with the crazy hair', hacker, burner, visionary and much more. And always a source of creative mayhem!

Millions more have used, contributed to, or benefited from his open-source vision and projects. There's no doubt that Rob was one of the spearheads of open-source. He championed open music data and showed the world that a non-profit open-source organisation could be financially viable, competing with (and far outliving most) similar corporate projects.

Below we will share some of Rob's history with MetaBrainz and staff. Thank you to everyone who left memories on the announcement post and elsewhere on the world wide web. His spirit lives on in our hearts and in 1's and 0's.

Rob and MetaBrainz

In the year 2000 a young Rob created MusicBrainz. He had just witnessed the corporatization of CDDB and embarked on the creation of a collaborative music database that could never be snatched from its contributors.

Young Rob ('the one with the hair') in the ballpit at the old London Last.fm offices

For over 25 years Rob guided MusicBrainz along its path, always focussed on his vision of openness and independence. He nestled his projects safely in the non-profit arms of the MetaBrainz Foundation, to further safeguard them for the future. Since the year 2000 many of MusicBrainz' sister projects have bloomed under the MetaBrainz umbrella, such as MusicBrainz Picard, BookBrainz and ListenBrainz, with Rob either supporting community efforts or identifying a need and kickstarting them himself.

26 years after founding MusicBrainz, with 143,901,298 and growing MusicBrainz IDs serving billions of global requests and (relatively) young ListenBrainz already at 1 billion+ listens, there is no doubt that Rob’s open-source efforts have changed the landscape of music data and, by extension, human culture (which relies on open and accessible histories) and the lives of musicians. It’s changed not just for us die-hards who live “in” the MetaBrainz ecosystem, but also for the millions of people using the thousands of services that interact with MetaBrainz’ data. It’s probably no exaggeration to say that most people have interacted with MetaBrainz data at some point in their lives.

Fearless, peerless

None of this could have happened without Rob's fierce and immovable guard against corporate influence and the enshittification that has taken down so many of MetaBrainz' contemporaries over the decades. He would gleefully share stories of offers to "purchase" MetaBrainz and the ignorance of trying to spend money on something that has effectively been made utterly un-purchasable. He did not bend the knee to power - exemplified by his famous 'Amazon cake' endeavour.

Rob was a hacker at heart which made it all the more admirable that he spent much of his time dealing with the humdrum of what has become a substantial operation with a respectable row of servers and employees, all clamouring to be kept warm, dry, fed and paid, not to mention guiding 100's of students and new contributors through their first forays into open-source.

Robert Kaye and some of the MetaBrainz team in 2024

Rob was also an excellent delegator. Once you had Rob's trust he would let you cook, resulting in a wide range of incredible talent being incorporated into the MetaBrainz team. Rob was still coding whenever he could, but his excellent team allowed him to spend the free time that MetaBrainz' admin left him hacking on collaborations, experiments and anything else that caught his interest - for instance, recently he was spending some evenings working on MBID Mapper 2.0, looking forward to GSoC, and was excited about upcoming collaborations.

Rob will be outlived by what he built, just as he intended. Nothing will be able to replace the presence of that cheeky smile, but Rob's influence will still be felt when the monument to many a king would have crumbled.

The Captain and My Friend