In the “What’s New” document for Ghidra version 10.2 there’s a fascinating description of a new feature:

Machine Learning

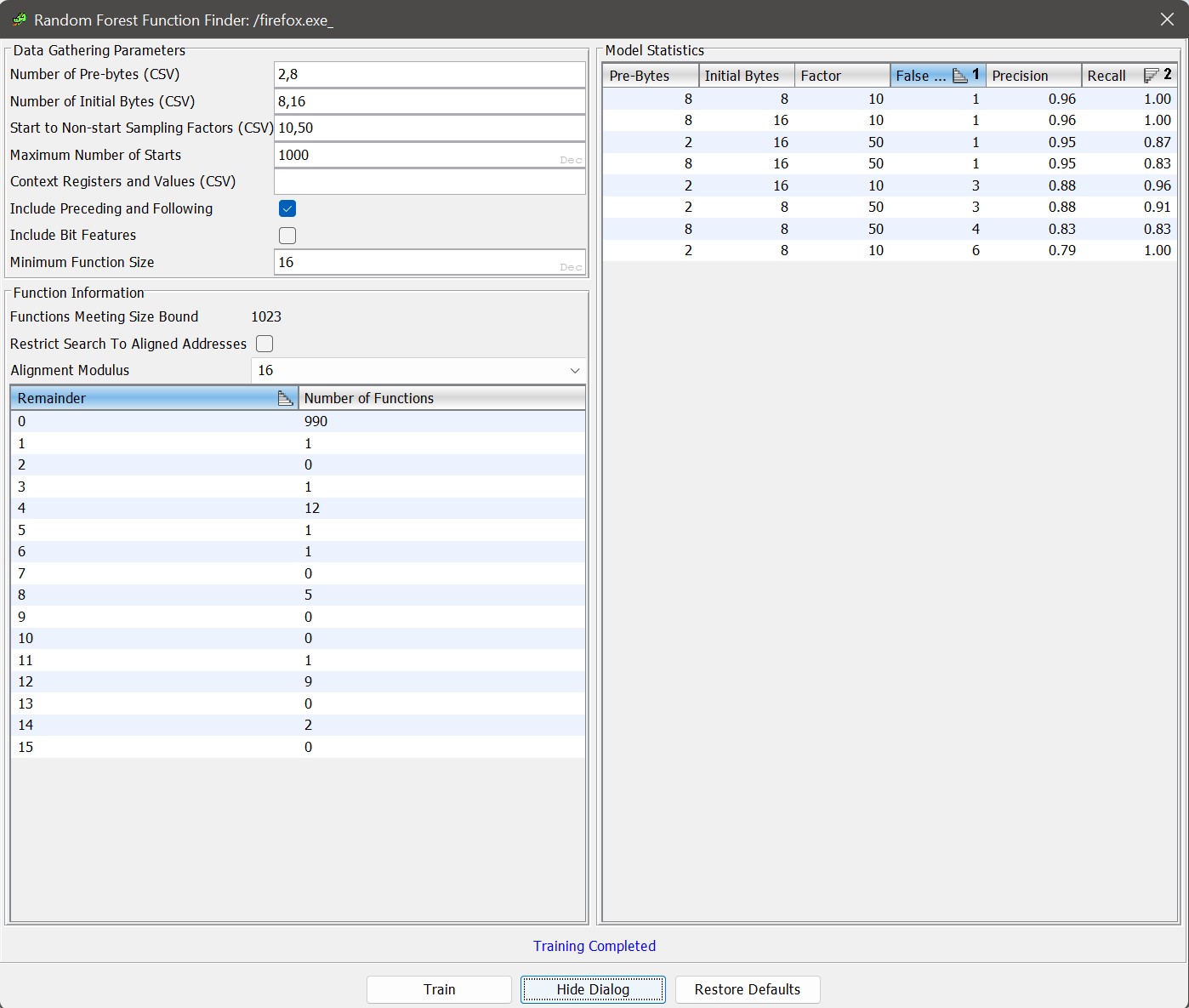

An optional MachineLearning extension has been added containing the Random Forest Function Finder Plugin. The plugin finds undiscovered functions within a binary using classifiers to identify potential function starts. The plugin trains classifiers using data sets created from known functions within a binary. These classifiers can then be used by the plugin on the original binary or other binaries to find additional functions missed by initial analysis.

The extension can be installed from the Ghidra Project Window via File->Install Extensions…

And, from the Change history:

Extensions. A MachineLearning extension has been added. This contains a plugin for finding code and functions in a binary by training on functions which have already been found. (GP-2204)

Deciding to classify an address as code and/or data is a challenging problem in reverse engineering. Many tools, Ghidra included, ship hardcoded signatures used to help find code and function start addresses. For example, Ghidra has the file x86win_patterns.xml that describes common function prologues on Intel x86:

<patternlist>

<patternpairs totalbits="32" postbits="16"> <!-- Main patterns -->

<prepatterns>

<data>0xcc</data> <!-- CC debug filler -->

<data>0xcccc</data> <!-- multiple CC filler bytes -->

<data>0x90</data> <!-- NOP filler -->

<data>0xc3</data> <!-- RET filler -->

<data>0xc9c3</data> <!-- LEAVE RET -->

<data>0xc2 ......00 0x00</data> <!-- RET longform -->

</prepatterns>

<postpatterns>

<data>0x558bec</data> <!-- PUSH EBP : MOV EBP,ESP -->

<data>0x83ec 0.....00 </data> <!-- SUBESP#small -->This is probably generated by the Function Bit Patterns Explorer Plugin:

The Function Bit Patterns Explorer Plugin is used to discover patterns in the bytes around function starts and returns. When analyzing a single program, such patterns can be used to discover new functions based on the functions that have already been found.

Anyways, the “machine learning” extension appears to be a new solution to this problem: generate a random forest classifier using sequences of code from the current module to decide if each address might be the start of a function. If the initial auto-analysis is able to reliably recover some code, such via recursive descent from the entry point and exports, then the byte patterns can be generalized from these examples.

Of course, this assumes that the undiscovered code looks similar to the discovered code. And, there’s the assumption that module doesn’t contain data that looks like valid function start code. I wonder if we can find a way to quantify and validate the performance of this technique?

Other questions:

- how well does a model trained on one binary work against another? Seems highly dependent upon compiler settings. But what about a collection of training binaries?

- the negative class examples are all code that aren’t function starts, not data. Does this introduce bias in the classifier?

- what’s the performance of scanning like? It scans for each byte, so this could be slow? But it could also be parallelized.

- how does the accuracy compare with the Bit Patterns mechanism? Is it more usable or automated? Why would you use one versus the other?

First, some background research:

“GP-2204” seems to be the internal issue tracker topic for the feature. It doesn’t correspond to ghidra#2204, which is about accessing the syntax tree of the decompilation window. Commit 956a276 by @ghidracadabra adds the feature: 52 changed files with 6,696 additions and 0 deletions.

The extension uses the Tribuo machine learning library for Java. This is what provides the random forest training and evaluation implementations.

Extension metadata from extension.properties:

name=MachineLearning

description=Finds functions using ML

author=Ghidra Team

createdOn=9/25/2022

version=@extversion@Here’s the built-in usage documentation via RandomForestFunctionFinderPlugin.htm. Take a moment to read all of it, since it touches on a number of configuration knobs that suggest how the classifier is intended to work.

Random Forest Function Finder Plugin

Random Forest Function Finder Plugin

This plugin trains models used to find function starts within a program. Essentially, the training set consists of addresses in a program where Ghidra's analysis was able to find functions. The models are then applied to the rest of the program. Models can also be applied to other programs.

In the motivating use case, you either don't know the toolchain which produced a program or do not have a large number of sample programs to train other types of models.

Note: in general, this plugin ensures that addresses used for training, testing, or searching for function starts are aligned relative to the processor's instruction alignment. Defined data within an executable block is an exception - all such bytes are added to the test set as examples of non-starts.

Basic Suggested Workflow

- To begin, select Search->For Code And Functions... from the Code Browser.

- Click the Train button to train models using the default parameters.

- Choose the model with the fewest false positives (which will be apparent from the Model Statistics table).

- Right-click on that model's row and select DEBUG - Show test set errors.

- Examine the resulting table to determine if there is a good cutoff for the probabilities. Note that some of the "errors" might not actually be errors of the model: see the discussion in Debug Model Table.

- If you're satisified with the performance of the model, right-click on the row and select Apply Model. If you aren't, you can try changing the parameters and training again. You can also try the Include Bit Features training option.

- In the resulting table, select all addresses with an Undefined interpretation whose probability is above your threshold, right-click, and select Disassemble. This will start disassembly (and follow-on analysis) at each selected address.

- Now, select all addresses whose interpretation is Block Start and whose probability of being a function is above your threshold, right-click, and select Create Function(s). It's also probably worth filtering out any addresses which are the targets of conditional references (which can be seen in the Conditional Flow Refs column).

The script FindFunctionsRFExampleScript.java shows how to access the functionality of this plugin programmatically.

Model Training Table

This table is the main interface for training and applying models.

Data Gathering Parameters

The values in this panel control the number of models trained and the data used to train them. The first three fields: Number of Pre-Bytes (CSV), Number of Initial Bytes (CSV), and Start to Non-start Sampling Factors (CSV) accept CSVs of positive integers as input (a single integer with no comma is allowed). Models corresponding to all possible choices of the three values will be trained and evaluated. That is, if you enter two values for the Pre-bytes field, three values for the Initial Bytes field, and four values for the Sampling Factors field, a total of 2*3*4 = 24 models will be trained and evaluated.

Number of Pre-bytes (CSV)

Values in this list control how many bytes before an address are used to construct its feature vector.

Number of Initial Bytes (CSV)

Values in this list control how many bytes are used to construct the feature vector of an address, starting at the address.

Start to Non-start Sampling Factors (CSV)

Values in this list control how many non-starts (i.e., addresses in the interiors of functions) are added to the training set for each function start in the training set.

Maximum Number Of Starts

This field controls the maximum number of function starts that are added to the training set.

Context Registers and Values (CSV)

This field allows you to specify values of context registers. Addresses will only be added to the training/test sets if they agree with these values, and the disassembly action on the Potential Functions Table will apply the context register values first. This field accepts CSVs of the form "creg1=x,creg2=y,...". For example, to restrict Thumb mode in an ARM program, you would enter "TMode=1" in this field.

Include Preceding and Following

If this is selected, for every function entry in the training set, the code units immediately before it and after it are added to the training set as negative examples (and similarly for the test set).

Include Bit Features

If this is selected, a binary feature is added to the feature vector for each bit in the recorded bytes.

Minimum Function Size

This value is the minimum size a function must be for its entry and interior to be included in the training and test sets.

Function Information

This panel displays information about the functions in the program.

Functions Meeting Size Bound

This field displays the number of functions meeting the size bound in the Minimum Function Size field. You can use this to ensure that the value in Maximum Number of Starts field doesn't cause all starts to be used for training (leaving none for testing).

Restrict Search to Aligned Addresses

If this is checked, only addresses which are zero modulo the value in the Alignment Modulus combo box are searched for function starts. This does not affect training or testing, but can be a useful optimization when applying models, for instance when the Function Alignment Table shows that all (known) functions in the program are aligned on 16-byte boundaries.

Alignment Modulus

The value in this combo box determines the modulus used when computing the values in the Function Alignment Table.

Function Alignment Table

The rows in this table display the number of (known) functions in the program whose address has the given remainder modulo the alignment modulus.

Model Statistics

This panel displays the statistics about the trained models as rows in a table. Actions on these rows allow you to apply the models or see the test set failures.

Apply Model Action

This action will apply the model to the program used to train it. The addresses searched consist of all addresses which are loaded, initialized, marked as executable, and not already in a function body (this set can be modified by the user via the Restrict Search to Aligned Addresses and Minimum Length of Undefined Ranges to Search options). The results are displayed in a Function Start Table.

Apply Model To... Action

This action will open a dialog to select another program in the current project and then apply the model to it. Note that the only check that the model is compatible with the selected program is that any context registers specified when training must be present in the selected program.

Debug Model Action

This action will display a Debug Model Table, which shows all of the errors encountered when applying the model to its test set.

Potential Functions Table

This table displays all addresses in the search set which the model thinks are function starts with probability at least .5. The table also shows the current "Interpretation" (e.g., undefined, instruction at start of basic block, etc) of the address along with the numbers of certain types of references to the address.

The following actions are defined on this table:

Disassemble Action

This action is enabled when at least one of the selected rows corresponds to an address with an interpretation of "Undefined". It begins disassembly at each "Undefined" address corresponding to a row in the selection.

Disassemble and Apply Context Action

This action is similar to the Disassemble Action, except it sets the context register values specified before training the model at the addresses and then disassembles.

Create Functions Action

This action is enabled whenever the selection contains at least one row whose corresponding address is the start of a basic block. This action creates functions at all such addresses.

Show Similar Function Starts Action

This action is enabled when the selection contains exactly one row. It displays a table of the function starts in the training set which are most similar to the bytes at the address of the row.

Similar Function Starts Table

This table displays the function starts in the training set which are most similar to a potential function start "from the model's point of view". Formally, similarity is measured using random forest proximity. Given a potential start p and a known start s, the similarity of p and s is the proportion of trees which end up in the same leaf node when processing p and s.

For convenience, the potential start is also displayed as a row in the table. In the Address column, its address is surrounded by asterisks.

Debug Model Table

This table has the same format as the Potential Functions Table but does not have the disassembly or function-creating actions (it does have the action to display similar function starts). It displays all addresses in the test set where the classifier made an error. Note that some in some cases, it might be the classifier which is correct and the original analysis which was wrong. A common example is a tail call which was optimized to a jump during compilation. If there is only one jump to this address, then analysis may (reasonably) think that the function is just part of the function containing the jump even though the classifier thinks the jump target is a function start.

Options

This plugin has the following options. They can be set in the Tool Options menu.

Maximum Test Set Size

This option controls the maximum size of the test sets (the test set of function starts and the test set of known non-starts which together form the model's "test set"). Each set that is larger than the maximum will be replaced with a random subset of the maximum size.

Minimum Length of Undefined Ranges to Search

This option controls the minimum length a run of undefined bytes must be in order to be searched for function starts. This is an optimization which allows you to skip the (often quite numerous) small runs of undefined bytes between adjacent functions. Note that this option has no effect on model training or evaluation.

Provided By: RandomForestFunctionFinderPlugin

Thoughts and notes

Setting it up:

- Toolchest -> File -> Install Extensions -> MachineLearning

- restart ghidra

Increasing “Start to Non-start Sampling Factors” increases training time (there’s more data to process). For a factor of 2, we can imagine that for each function, two random addreses are chosen from the interior of that function to represent a non-start.

Blind spot: data thats not code, such as switch tables or string resources. These won’t be seen in the training data, neither as a function start nor non-start. So how does the model classify this?

Results: It found five more functions. The UI makes it very easy to review and apply the recommendations.

Potential issue (I am not a ML engineer!): The extension treats all the features, such as “initial byte” values, as numeric. While byte values are physically numeric, when they encode things like instruction opcodes, I don’t think they shouldn’t necessarily be interpreted as such. For example, drastically different x86 instructions may have similar encodings. I think this may be a problem for the ML extension because a Random Forest classifier does try to exploit characteristics of numeric features, like when it tries to find the best value to branch a tree. I understand that a typical fix for this, to convert a numeric feature to a categorical featue, would be to use one-hot encoding. Then again, the extension does seem to work as-is, so my intuition may be wrong.

Features that I’d propose for an alterntive implementation:

- size of first basic block, via linear disassembly

- does first basic block contain invalid or unusual instructions?

- first N instructions, bucketed into categories, like PUSH, POP, CALL, ARITH, MEM, OTHER, etc.

- prior N bytes, bucketed into categories, like ZERO, NOP, SMALL, BIG, etc.

I’d also consider training against data, both data found in the file, and perhaps against randomly generated data. The goal here would be to better differentiate a function start from non-code data.